What Are Artificial Neural Networks? A Complete Guide

Learn what Artificial Neural Networks are and how they work in Machine learning, along with their types, applications, advantages, and challenges, explained in simple terms.

Artificial Neural Networks (ANNs) are one of the most important concepts behind modern Machine learning systems. From voice assistants and recommendation engines to medical diagnosis and self-driving cars, neural networks power many technologies we use daily. As data grows in volume and complexity, hand-written rules become hard to maintain and often fail to capture the patterns hidden in it. This is where Machine learning, especially neural networks, plays a crucial role.

Artificial Neural Networks are inspired by the way the human brain processes information. They allow machines to learn from data, recognize patterns, and make decisions with minimal human intervention. For students, professionals, and beginners exploring Machine learning, understanding ANNs is a foundational step toward mastering artificial intelligence.

What Are Artificial Neural Networks?

Artificial Neural Networks are computational models loosely inspired by how the human brain learns and processes information. In Machine learning, ANNs help systems learn from examples rather than follow fixed rules. An ANN consists of interconnected units called neurons, which receive input data and produce an output. Each neuron performs a simple calculation, but together they can solve complex problems such as image recognition or language translation.

Key characteristics of Artificial Neural Networks

- Learn patterns from data

- Improve performance as they are trained on more examples

- Handle complex and non-linear problems

- Adapt to new information when retrained on updated data

Unlike traditional rule-based algorithms, Machine learning models based on neural networks can identify hidden patterns without being explicitly programmed to look for them.

Why Artificial Neural Networks Are Important in Machine Learning

Artificial Neural Networks are a core pillar of Machine learning because they enable systems to learn directly from data. They are especially useful when:

- Data is large and complex

- Rules are difficult to define manually

- Patterns are hidden or non-linear

ANNs form the foundation of deep learning, a powerful subset of Machine learning responsible for major breakthroughs in artificial intelligence.

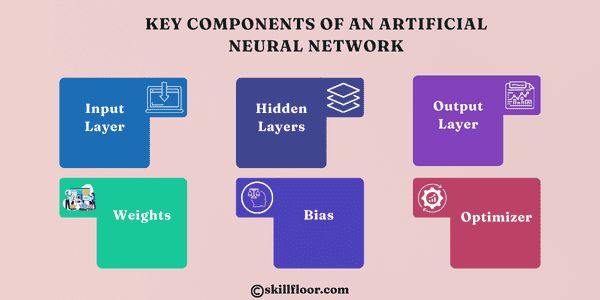

Key Components of an Artificial Neural Network

To understand how Artificial Neural Networks operate in Machine learning, it is essential to become familiar with their core components. Each part plays a specific role in helping the network learn from data, identify patterns, and make accurate predictions. When combined, these components enable neural networks to function as powerful learning systems.

1. Input Layer

The input layer is the first layer of an Artificial Neural Network. Its main role is to receive raw data and pass it to the network for processing. Each neuron in the input layer represents a single feature of the data.

For example, in an image recognition task, each input neuron may represent the value of one pixel. In a prediction task on tabular data, input neurons may represent numerical values such as age, salary, or temperature. The input layer itself does not perform calculations; it simply passes these values on to the hidden layers for further processing.

2. Hidden Layers

Hidden layers are where most of the learning happens. These layers transform the input data through many weighted sums and activation functions, extracting meaningful patterns along the way. A neural network can have one hidden layer or many, depending on the complexity of the task.

Each neuron in a hidden layer receives inputs from the previous layer, multiplies each input by a weight, adds a bias, and passes the result through an activation function. Adding more hidden layers lets the Machine learning model build more advanced and abstract patterns on top of simpler ones.
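To make the neuron computation concrete, here is a minimal Python sketch of a single neuron. The function names `sigmoid` and `neuron`, the choice of a sigmoid activation, and the input, weight, and bias values are illustrative assumptions; in a trained network the weights and bias would be learned rather than hand-picked.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of inputs, plus bias, through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Three input features with hand-picked (not learned) weights.
output = neuron([0.5, 1.0, -1.5], [0.4, -0.2, 0.1], bias=0.05)
print(round(output, 4))  # → 0.475
```

Here the weighted sum is 0.2 - 0.2 - 0.15 + 0.05 = -0.1, and sigmoid(-0.1) is about 0.475.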

3. Output Layer

The output layer is the final layer of the neural network and produces the final result or prediction. The type of output depends on the problem being solved.

For example, the output could be:

- A binary result such as yes or no, or spam or not spam

- A category chosen from several options, such as the topic of a news article

- A numerical value such as price or temperature

In Machine learning, the output layer plays a crucial role in decision-making and evaluation.
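One common convention (not the only one) is to match the output layer's activation function to the kind of result listed above: sigmoid for a binary result, softmax for a choice among several categories, and no activation at all (identity) for a numerical value. A minimal sketch, where the raw scores fed in are hypothetical values standing in for the last layer's weighted sums:

```python
import math

def sigmoid(z):
    """Binary output: a probability between 0 and 1 (e.g. spam vs. not spam)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(zs):
    """Multi-class output: one probability per category, summing to 1."""
    m = max(zs)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def identity(z):
    """Numerical output: the raw value, e.g. a predicted price."""
    return z

# Hypothetical raw scores produced by the last layer.
p_spam = sigmoid(2.0)                   # single probability
topic_probs = softmax([2.0, 1.0, 0.1])  # e.g. three possible news topics
price = identity(245.7)                 # unbounded number
```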

4. Weights

Weights determine how strongly each input influences a neuron's output. Every connection between neurons has an associated weight that controls how much impact its input has. During training, Machine learning algorithms adjust these weights to reduce errors. Weights with a large magnitude (positive or negative) make their inputs more influential, while weights close to zero have little impact on predictions.

5. Bias

Bias is an additional parameter added to each neuron that helps the model fit data more accurately. It lets the network shift the activation function left or right, instead of forcing every neuron's decision point to sit at zero. This flexibility makes neural networks better able to model real-world data patterns, improving overall Machine learning performance.
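The shifting effect can be seen in a single sigmoid neuron with one input x, weight w, and bias b (symbols introduced here for illustration). The sigmoid crosses 0.5 exactly where its argument is zero:

```latex
y = \sigma(wx + b), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}

\sigma(wx + b) = 0.5 \iff wx + b = 0 \iff x = -\frac{b}{w} \quad (w \neq 0)
```

Without a bias (b = 0), the midpoint is stuck at x = 0. With, for example, w = 2 and b = -4, it moves to x = 2, so the neuron only "switches on" for larger inputs.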

6. Loss Function

The loss function measures how far the network’s predictions are from the actual results. It provides feedback on how well the model is performing. A smaller loss indicates better performance, while a higher loss signals the need for improvement. In Machine learning, minimizing the loss function is the primary goal during training.
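Two widely used loss functions are mean squared error, typical for numerical outputs, and binary cross-entropy, typical for yes/no outputs. The sketch below is a plain-Python version of each, assuming equal-length lists of targets and predictions; the example values are made up for illustration.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average squared difference; common for numerical predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Heavily penalizes confident wrong probabilities; common for yes/no outputs."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

# Predictions closer to the targets give a smaller loss.
good = mean_squared_error([3.0, 5.0], [2.9, 5.2])
bad = mean_squared_error([3.0, 5.0], [1.0, 8.0])
```

For the first pair of predictions the squared errors are 0.01 and 0.04, giving a mean of 0.025; the second pair gives (4 + 9) / 2 = 6.5.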

7. Optimizer

The optimizer is responsible for updating the weights and biases to reduce the loss. It determines how the model learns from errors. Common optimizers include Gradient Descent and its variants, such as Stochastic Gradient Descent (SGD) and Adam. An effective optimizer helps the Machine learning model converge faster and reach a lower loss.
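Gradient Descent repeatedly nudges each parameter a small step against the gradient of the loss. The sketch below applies it to a toy one-parameter loss, L(w) = (w - 3)^2, chosen only because its minimum (w = 3) is known in advance; the function name, learning rate, and step count are assumptions.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient: w <- w - learning_rate * grad(w)."""
    w = start
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w

# Toy loss L(w) = (w - 3)^2 has gradient 2 * (w - 3) and its minimum at w = 3.
w_final = gradient_descent(lambda w: 2 * (w - 3), start=0.0)
```

The learning rate matters: in this toy example each step shrinks the distance to the minimum by a factor of 0.8, but with a learning rate of 1.0 or more the updates would oscillate or diverge instead of converging.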

Together, these components form the foundation of Artificial Neural Networks and enable Machine learning systems to learn efficiently, adapt to data, and solve complex problems.

Working of Artificial Neural Networks

The working of Artificial Neural Networks in Machine learning follows a systematic learning cycle that allows the model to learn from data and improve its predictions over time. Instead of being given fixed rules, the network gradually adjusts itself through repeated training until it achieves reliable accuracy. This learning process consists of several important steps.

Step 1: Forward Propagation

Forward propagation is the first stage of learning. In this step, input data is fed into the neural network through the input layer. At each neuron, every input value is multiplied by its corresponding weight, the results are summed, and a bias is added.

The weighted values are then passed through activation functions in the hidden layers. These activation functions introduce non-linearity, enabling the Machine learning model to learn complex patterns. As data moves forward through each layer, calculations continue until the network produces an output at the final layer.
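The forward pass can be sketched for a tiny network with two inputs, three hidden neurons using the ReLU activation, and one sigmoid output neuron. The layer sizes, the choice of ReLU and sigmoid, the helper names, and all parameter values are hypothetical, picked only to show data flowing from layer to layer.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` belongs to one neuron."""
    return [activation(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]

def forward(x, params):
    """Input -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = dense(x, params["W1"], params["b1"], relu)
    output = dense(hidden, params["W2"], params["b2"], sigmoid)
    return hidden, output

# Hypothetical, hand-picked parameters for a 2-input, 3-hidden, 1-output network.
params = {
    "W1": [[0.5, -0.6], [0.3, 0.8], [-0.7, 0.2]],
    "b1": [0.1, 0.0, -0.1],
    "W2": [[0.9, -0.4, 0.6]],
    "b2": [0.05],
}
hidden, output = forward([1.0, 2.0], params)
```

With input [1.0, 2.0], two of the three hidden neurons have negative weighted sums, so ReLU outputs 0 for them. Only the second hidden neuron (value 1.9) reaches the output, which is sigmoid(-0.71), about 0.33.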

Step 2: Loss Calculation

Once the output is generated, the network evaluates how accurate the prediction is. The predicted result is compared with the actual or expected output.

This difference is measured using a loss function, which calculates the error value. The loss function provides feedback on how well the Machine learning model is performing. A lower loss means the predictions are closer to the expected outputs, while a higher loss indicates the need for further improvement.

Step 3: Backpropagation

Backpropagation is the process that enables learning from mistakes. In this step, the error calculated by the loss function is propagated backward through the network, from the output layer toward the input layer.

Using the chain rule of calculus, the network computes how much each weight and bias contributed to the error; this quantity is the gradient of the loss with respect to that parameter. These gradients tell the optimizer in which direction to adjust each weight, allowing the Machine learning model to correct itself and learn more effectively.
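For a single sigmoid neuron with a squared-error loss, backpropagation reduces to applying the chain rule by hand. The sketch below computes the gradients analytically and compares them with a finite-difference estimate, a common way to sanity-check gradient code. The neuron, the loss choice, and the numbers are assumptions for illustration; real networks repeat the same chain-rule step layer by layer, and frameworks automate it.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error of a single sigmoid neuron on one example."""
    return (sigmoid(w * x + b) - y) ** 2

def backprop(w, b, x, y):
    """Chain rule: dL/dw = dL/dp * dp/dz * dz/dw (and likewise for b)."""
    z = w * x + b
    p = sigmoid(z)
    dL_dp = 2 * (p - y)   # derivative of (p - y)^2 with respect to p
    dp_dz = p * (1 - p)   # derivative of the sigmoid
    dL_dz = dL_dp * dp_dz
    return dL_dz * x, dL_dz * 1.0  # dz/dw = x, dz/db = 1

# Sanity check against a central finite-difference estimate of the gradient.
w, b, x, y = 0.8, -0.3, 1.5, 1.0
grad_w, grad_b = backprop(w, b, x, y)
h = 1e-6
numeric_w = (loss(w + h, b, x, y) - loss(w - h, b, x, y)) / (2 * h)
numeric_b = (loss(w, b + h, x, y) - loss(w, b - h, x, y)) / (2 * h)
```

Both gradients come out negative here: the prediction is below the target of 1.0, so increasing w or b would reduce the loss, and the optimizer will move them in that direction.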

Step 4: Weight Update

After backpropagation, the optimizer updates the weights and biases to reduce the loss. In Gradient Descent, the gradient sets the direction of each update and a learning rate controls its size. With each update, the model's predictions on the training data typically improve. This entire cycle of forward propagation, loss calculation, backpropagation, and weight update repeats over many passes through the training data until the Machine learning model achieves acceptable performance and reliable predictions.
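The four steps can be combined into a complete, if tiny, training loop. This sketch trains the simplest possible model, one neuron with no activation function (which is just linear regression), on toy data generated from y = 2x + 1. The data, learning rate, and epoch count are assumptions chosen so that the example converges.

```python
def train(xs, ys, learning_rate=0.05, epochs=500):
    """Repeat forward pass, loss, gradients, and update for a one-input linear neuron."""
    w, b = 0.0, 0.0
    n = len(xs)
    loss = float("inf")
    for _ in range(epochs):
        # Step 1: forward propagation
        preds = [w * x + b for x in xs]
        # Step 2: loss calculation (mean squared error)
        loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n
        # Step 3: gradients of the loss with respect to w and b
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Step 4: gradient descent weight update
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, loss  # loss is from the last forward pass

# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b, final_loss = train(xs, ys)
```

After training, w and b should end up very close to 2 and 1, the values used to generate the data.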

This iterative learning process is what enables Artificial Neural Networks to improve continuously and solve complex real-world problems.

Types of Artificial Neural Networks

Different types of Artificial Neural Networks are used in Machine learning, depending on the nature of the data and the problem being solved. Each type is designed to handle specific tasks more efficiently, making neural networks highly adaptable across applications.

1. Feedforward Neural Network

A Feedforward Neural Network is the simplest form of an Artificial Neural Network. In this type, data moves in only one direction—from the input layer through the hidden layers to the output layer, without any loops or feedback.

These networks are commonly used for basic tasks such as classification and numerical prediction. Because of their straightforward structure, they are often the first neural network model taught in Machine learning courses.

2. Convolutional Neural Network (CNN)

Convolutional Neural Networks are specifically designed to work with visual data such as images and videos. Their convolutional layers slide small, learnable filters (also called kernels) across the input to automatically detect features such as edges, shapes, and patterns.

CNNs reduce the need for manual feature extraction and are widely used in computer vision tasks. In Machine learning, CNNs power applications such as facial recognition, image classification, and object detection.
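The core operation of a convolutional layer can be sketched in plain Python. Like most deep learning libraries, this version does not flip the kernel, so strictly speaking it computes cross-correlation. The 4x4 image and the 2x2 vertical-edge filter are hand-made assumptions; a real CNN learns its filter values during training and usually adds a bias and an activation function.

```python
def convolve2d(image, kernel):
    """'Valid' 2D convolution as used in CNNs (the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the filter with the image patch under it and sum the results.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# A 4x4 image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-made vertical-edge filter; in a real CNN these values are learned.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, kernel)
```

Every row of the resulting feature map is [0, 2, 0]: the filter responds only where dark pixels meet bright ones, which is the edge in the middle of the image.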

3. Recurrent Neural Network (RNN)

Recurrent Neural Networks are designed to process sequential data where order matters. Unlike feedforward networks, RNNs have connections that loop back, allowing them to retain information from previous inputs.

This memory feature makes RNNs useful in speech recognition, language translation, and text analysis. In Machine learning, RNNs help models understand context and sequence relationships.
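The "loop back" idea can be sketched with a scalar hidden state that is updated at every time step. The tanh activation, the parameter names `w_x`, `w_h`, and `b`, and their values are hypothetical; practical RNNs use vectors and weight matrices, but the recurrence has the same shape.

```python
import math

def rnn_forward(sequence, w_x, w_h, b):
    """Minimal recurrent cell: the hidden state h carries information between steps."""
    h = 0.0
    states = []
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Hypothetical weights; the same weights are reused at every time step.
states_a = rnn_forward([1.0, 0.0, 0.0], w_x=0.9, w_h=0.5, b=0.0)
states_b = rnn_forward([0.0, 0.0, 0.0], w_x=0.9, w_h=0.5, b=0.0)
```

Both sequences end with the same inputs (0.0, 0.0), yet their final states differ, because the first sequence's hidden state still carries a trace of its initial 1.0.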

4. Long Short-Term Memory (LSTM)

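Long Short-Term Memory networks are a specialized type of RNN designed to handle long-term dependencies. Traditional RNNs struggle to remember information over long sequences, largely because of the vanishing gradient problem during training. LSTMs address this with a memory cell and gates (forget, input, and output) that control which information is kept, added, or passed on.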

LSTMs are widely used in Machine learning for time-series forecasting, speech processing, and natural language understanding.
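Below is a sketch of one LSTM time step with scalar values, following the commonly used formulation with forget, input, and output gates plus a candidate memory value. The parameter names in `p` and all numbers are hypothetical; real LSTMs use vectors and learned weight matrices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p holds hypothetical weights (w*, u*) and biases (b*)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new content to write
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate memory content
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much memory to expose
    c = f * c_prev + i * g  # updated memory cell
    h = o * math.tanh(c)    # new hidden state
    return h, c

# Run a short sequence with every parameter set to 0.5.
names = ["wf", "uf", "bf", "wi", "ui", "bi", "wg", "ug", "bg", "wo", "uo", "bo"]
params = {k: 0.5 for k in names}
h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.2]:
    h, c = lstm_step(x, h, c, params)

# Gates pushed to "keep everything, write nothing": the memory cell is preserved.
keep = dict.fromkeys(params, 0.0)
keep["bf"], keep["bi"] = 10.0, -10.0
_, c_kept = lstm_step(5.0, 0.0, 0.8, keep)
```

The second call shows the gating idea: with the forget gate pushed close to 1 and the input gate close to 0, the cell value of 0.8 passes through almost unchanged, even though the input is large.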

5. Deep Neural Networks (DNN)

Deep Neural Networks contain multiple hidden layers between the input and output layers. These layers enable the network to learn complex, high-level features from data. They form the foundation of deep learning and are capable of handling highly complex tasks. In Machine learning, deep neural networks are used in advanced applications such as autonomous systems, voice assistants, and recommendation engines.

Each type of Artificial Neural Network expands the capabilities of Machine learning, allowing models to solve a wide range of real-world problems efficiently and accurately.

Applications of Artificial Neural Networks

ANNs power many Machine learning applications:

- Healthcare: Disease detection, medical imaging, predictive diagnostics

- Finance: Fraud detection, risk assessment, algorithmic trading

- Marketing: Customer segmentation, recommendations, personalization

- Transportation: Autonomous vehicles, traffic forecasting

- Language Processing: Chatbots, voice assistants, translation

Their ability to learn from data makes them invaluable across industries.

Challenges in Artificial Neural Networks

Artificial Neural Networks play a vital role in Machine learning, but they also come with several challenges that affect performance, scalability, and real-world adoption. Understanding these limitations helps learners and practitioners design better models and set realistic expectations. Despite their power, neural networks require careful planning, resources, and expertise to work effectively.

Common Challenges Include:

- High Data Requirements: Neural networks need large amounts of quality data to train effectively, which is not always available.

- Computational Complexity: Training deep networks requires significant processing power, memory, and time.

- Overfitting Issues: Models may perform well on training data but fail on new, unseen data without proper regularization.

- Lack of Interpretability: Neural networks often act as “black boxes,” making it difficult to explain decisions.

- Hyperparameter Tuning: Selecting the right architecture, learning rate, and parameters can be complex and time-consuming.

- Sensitivity to Data Quality: Poor or biased data can lead to inaccurate predictions in Machine learning systems.

Future of Artificial Neural Networks

The future of Artificial Neural Networks in Machine learning is evolving rapidly with advancements in data, algorithms, and computing power. These developments are making neural networks more efficient, transparent, and widely usable. As industries adopt AI at scale, innovation in neural network design will continue to grow.

- Explainable AI: Focuses on making neural network decisions transparent and understandable.

- Energy-efficient neural networks: Reduce computational cost and environmental impact.

- Edge AI and on-device learning: Enable real-time processing with better privacy.

- Ethical and responsible Machine learning: Aims to ensure fairness, accountability, and reduced bias.

Together, these trends will shape the next generation of intelligent systems.

Conclusion

Artificial Neural Networks are a core building block of modern Machine learning. Inspired by the human brain, they enable systems to learn from data, recognize patterns, and make intelligent decisions. By understanding their structure, working process, types, applications, and challenges, learners gain a strong foundation in artificial intelligence. Whether you are a student, professional, or beginner, mastering Artificial Neural Networks opens the door to advanced Machine learning concepts and real-world problem-solving. As technology continues to evolve, neural networks will remain at the heart of intelligent systems driving innovation across industries.