Introduction to Feature Engineering in Data Science

Learn how Feature Engineering in Data Science transforms raw data into clear, meaningful insights, boosting model accuracy, performance, and real-world results.

What happens if, no matter how often you tweak your model, it never gets better? Many people chase bigger algorithms and still fall short. The real lever is usually simpler: better features. When your data is clean and informative, models learn faster, behave more sensibly, and produce results you can rely on.

Even with the best model, poor features will still yield poor results. Conversely, even basic models can perform exceptionally well when they have strong features. This highlights the importance of feature engineering, which transforms raw data into something understandable, practical, and suitable for machine learning.

What Are Features?

In data science, features are the pieces of information a machine learning model uses to learn and make predictions. You can think of them as the inputs you give the model, or the columns in your dataset. Each feature describes one small part of the problem you are trying to solve.

For example, size, number of rooms, location, and age can serve as features for predicting home prices. In a customer-focused problem, features might include time spent, money spent, or other user behavior. Recommendations may be based on what users watched, liked, or kept watching.

Real-World Examples of Features

Let’s make this very practical:

1. House Price Prediction

Features could be:

- Size of the house
- Number of bedrooms
- Location

The model looks at these features and learns how they relate to the final price.

2. Fraud Detection

Features could be:

- Transaction amount
- Time of purchase
- Country of the user

These features help the model learn what looks normal and what looks risky.

3. Recommendation Systems (like Netflix or Amazon)

Features could be:

- What you watched or bought before
- How long you watched something
- What other similar users liked

These features help the system guess what you might like next.

So… What Exactly Is Feature Engineering?

Feature engineering is the process of turning raw data into inputs a model can learn from. It sits at the center of many successful data science projects because it shapes the story the numbers tell. It helps models pick up trends, make better decisions, and avoid being misled by messy data.

Without it, even the most sophisticated model struggles. Feature work is marked by clear thinking, simple steps, and small adjustments that pay off disproportionately. You refine, reshape, and test ideas until the data says what it means in a form a machine can use.

It involves:

- Cleaning features
- Transforming features
- Creating new features
- Selecting the most useful features

Why Feature Engineering is Important

The foundation of successful data science initiatives is feature engineering, which turns unprocessed data into insightful knowledge that enhances model accuracy, dependability, and interpretability.

- Improves Model Accuracy: Better features let models capture patterns more effectively, which raises predictive accuracy and makes real-world results more dependable.
- Reduces Model Complexity: Well-designed features simplify the learning task, so models can perform well without overly complicated methods or heavy computation.
- Handles Real-World Data: Feature engineering cleans, transforms, and organizes messy real-world data so machine learning algorithms can use it.
- Prevents Overfitting Issues: Careful feature selection keeps models focused on meaningful patterns, which reduces the risk of memorizing noise and performing badly on new data.
- Encodes Domain Knowledge: Features built from domain insight bridge the gap between business expertise and machine learning by giving the model a better view of the problem.
- Speeds Up Model Training: Good features cut noise and irrelevant information, so models often train faster and give more stable results.

Focusing on feature engineering keeps your models aligned with practical requirements, improves performance, and helps you meet the broader goals of a data science project.

Where Feature Engineering Fits in the ML Pipeline

At the core of the machine learning pipeline is feature engineering, which converts unprocessed data into useful inputs that optimize model learning and predictive performance.

- Data Collection Stage: Raw data arrives from many sources. Thinking about features at this stage helps ensure the information gathered is relevant and useful for the intended machine learning task.
- Data Cleaning Step: Cleaning addresses errors, inconsistencies, and missing values, giving feature engineering and model training a solid base.
- Feature Creation Phase: New features are derived from patterns, trends, and relationships in the existing data to help the model understand the problem.
- Feature Transformation Step: Mathematical transformations, categorical encoding, and numerical scaling put features into a form suited to machine learning algorithms.
- Feature Selection Process: Redundant or irrelevant features are removed, leaving the most informative signals, to improve accuracy and reduce complexity.
- Model Training Integration: The engineered features are passed to the model, where learning algorithms use them to identify patterns and make predictions.

Applied carefully after data cleaning, feature engineering lets models learn from high-quality, structured data, which improves predictions and supports successful machine learning projects.
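One way to picture these stages working together is a scikit-learn `Pipeline`. The sketch below is only an illustration under stated assumptions: the column names (`size_sqft`, `bedrooms`, `neighborhood`, `price`) and all values are made up, and linear regression stands in for whatever model you would actually use.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical toy data: two numeric columns, one categorical column, one target.
data = pd.DataFrame({
    "size_sqft": [850.0, 1200.0, np.nan, 2000.0, 1500.0, 950.0],
    "bedrooms": [2, 3, 3, 4, 3, 2],
    "neighborhood": ["A", "B", "A", "C", "B", "A"],
    "price": [150_000, 210_000, 200_000, 340_000, 260_000, 165_000],
})
X = data.drop(columns="price")
y = data["price"]

# Cleaning + transformation for numeric columns: fill gaps, then standardize.
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# Transformation for the categorical column: one 0/1 column per category.
categorical = OneHotEncoder(handle_unknown="ignore")

features = ColumnTransformer([
    ("num", numeric, ["size_sqft", "bedrooms"]),
    ("cat", categorical, ["neighborhood"]),
])

# Model training integration: engineered features flow straight into the model.
model = Pipeline([("features", features), ("regressor", LinearRegression())])
model.fit(X, y)
predictions = model.predict(X)
```

Keeping the feature steps inside the pipeline means the same transformations are applied at training time and at prediction time.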

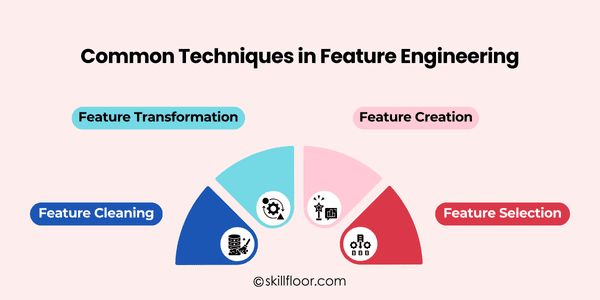

Common Techniques in Feature Engineering

Feature engineering is a collection of methods to improve the clarity, intelligence, and utility of your data for data science models. The majority of feature engineering techniques can be divided into four groups:

1. Feature Cleaning

Purpose: Resolve problems with your data so the model can learn correctly and without mistakes.

Why it matters: Models become confused by dirty or inconsistent data, which leads to erroneous predictions and unstable outcomes.

Key tasks:

- Handling missing values (e.g., filling with mean, median, or mode)
- Removing or treating outliers (extreme or unusual values)
- Correcting inconsistencies (e.g., “USA” vs “United States”)

Imagine it as carefully cleaning and prepping raw ingredients before cooking.
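The three cleaning tasks above can be sketched with pandas. This is a minimal illustration, not a recipe: the `country` and `income` columns and their values are invented, and clipping to the 1.5 × IQR fences is just one common way to treat outliers.

```python
import pandas as pd

# Hypothetical raw records with a missing value, an extreme value,
# and two spellings of the same country.
raw = pd.DataFrame({
    "country": ["USA", "United States", "Canada", "USA", "Canada"],
    "income": [52_000.0, None, 48_000.0, 1_000_000.0, 51_000.0],
})

cleaned = raw.copy()

# 1. Correct inconsistencies: map known variants to one canonical label.
cleaned["country"] = cleaned["country"].replace({"United States": "USA"})

# 2. Handle missing values: fill with the median, which is less
#    affected by extreme values than the mean.
median_income = cleaned["income"].median()
cleaned["income"] = cleaned["income"].fillna(median_income)

# 3. Treat outliers: clip values to the 1.5 * IQR fences.
q1, q3 = cleaned["income"].quantile([0.25, 0.75])
iqr = q3 - q1
cleaned["income"] = cleaned["income"].clip(
    lower=q1 - 1.5 * iqr, upper=q3 + 1.5 * iqr
)
```

Whether to clip, remove, or keep extreme values depends on the problem; in fraud detection, for instance, the unusual values may be exactly the signal you want.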

2. Feature Transformation

Purpose: Transform unprocessed features into clearly comprehensible formats for models to enhance learning and enable accurate pattern recognition.

Why it matters: Most machine learning models need numerical input, and many (for example, distance-based or gradient-based methods) learn faster and more accurately when inputs are scaled or normalized.

Key techniques:

- Scaling: Standardization (Z-score) or normalization (Min-Max)
- Encoding: Converting categories to numbers (One-Hot, Label Encoding)
- Mathematical transformations: Log, square root, Box-Cox for skewed data

Think of it as reworking clay: the substance doesn't change, but it becomes easier to mold into useful shapes.
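As a rough sketch, the scaling, encoding, and log-transform techniques listed above might look like this with pandas and scikit-learn. The `age`, `income`, and `city` columns are hypothetical, and `log1p` is used here as one simple option for skewed data.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical data: one well-behaved numeric column, one right-skewed
# numeric column, and one categorical column.
df = pd.DataFrame({
    "age": [22, 35, 47, 58],
    "income": [20_000, 45_000, 90_000, 1_200_000],
    "city": ["Paris", "Lima", "Paris", "Oslo"],
})

# Scaling: z-score standardization (mean 0, unit variance) ...
df["age_z"] = StandardScaler().fit_transform(df[["age"]]).ravel()
# ... and min-max normalization (values rescaled into [0, 1]).
df["age_minmax"] = MinMaxScaler().fit_transform(df[["age"]]).ravel()

# Encoding: one-hot turns each category into its own 0/1 column.
one_hot = pd.get_dummies(df["city"], prefix="city", dtype=int)

# Mathematical transformation: log1p compresses the long right tail.
df["income_log"] = np.log1p(df["income"])
```

In a real project the scalers would be fitted on training data only and then reused on new data, rather than refitted each time.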

3. Feature Creation

Purpose: Create new features that help models learn more efficiently during the data preparation stage by exposing hidden patterns in the data.

Why it matters: Since relevant signals are frequently absent from raw data alone, developing new features enhances models and increases prediction accuracy.

Key techniques:

- Combining existing features (e.g., BMI = weight / height²)
- Extracting date/time features (e.g., weekday, month, is weekend)
- Binning or bucketing continuous variables (e.g., age → young, adult, senior)
- Polynomial or interaction features (e.g., x × y)

Consider it as creating new tools from pre-existing data, which facilitates the extraction of insights and the resolution of issues.
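Here is a small pandas sketch of the four creation techniques above. The column names, the sample values, and the age cut-offs (18 and 65) are assumptions chosen for illustration only.

```python
import pandas as pd

# Hypothetical records; all names and values are illustrative.
people = pd.DataFrame({
    "weight_kg": [70.0, 85.0, 54.0],
    "height_m": [1.75, 1.80, 1.60],
    "age": [17, 42, 71],
    "signup": pd.to_datetime(["2024-01-06", "2024-03-13", "2024-07-21"]),
})

# Combining existing features: BMI = weight / height squared.
people["bmi"] = people["weight_kg"] / people["height_m"] ** 2

# Extracting date/time features (dayofweek: Monday = 0, Sunday = 6).
people["weekday"] = people["signup"].dt.dayofweek
people["month"] = people["signup"].dt.month
people["is_weekend"] = people["weekday"] >= 5

# Binning a continuous variable into labeled groups.
# right=False makes the bins [0, 18), [18, 65), [65, 120).
people["age_group"] = pd.cut(
    people["age"],
    bins=[0, 18, 65, 120],
    labels=["young", "adult", "senior"],
    right=False,
)

# Interaction feature: the product of two columns.
people["weight_x_height"] = people["weight_kg"] * people["height_m"]
```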

4. Feature Selection

Purpose: Remove unnecessary or redundant features so the model learns better and runs more efficiently.

Why it matters: A smaller set of strong features is often preferable, since too many features can confuse models, slow training, and lower prediction accuracy.

Key techniques:

- Filter methods: Correlation, variance thresholds
- Wrapper methods: Forward selection, backward elimination, Recursive Feature Elimination (RFE)
- Embedded methods: Lasso regression, tree-based feature importance

This is similar to carefully selecting the best ingredients for a meal to ensure less mess and better outcomes.
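To make the three families concrete, the sketch below applies one method from each to the same synthetic dataset using scikit-learn. The data is generated purely for illustration: two columns carry signal, one is pure noise, and one is constant. The Lasso `alpha` of 0.1 is an arbitrary choice, not a recommended default.

```python
import numpy as np
from sklearn.feature_selection import RFE, VarianceThreshold
from sklearn.linear_model import Lasso, LinearRegression

# Synthetic data: columns are [signal_a, signal_b, noise, constant].
rng = np.random.default_rng(0)
n = 200
signal_a = rng.normal(size=n)
signal_b = rng.normal(size=n)
noise = rng.normal(size=n)
constant = np.ones(n)
X = np.column_stack([signal_a, signal_b, noise, constant])
y = 3.0 * signal_a - 2.0 * signal_b + rng.normal(scale=0.1, size=n)

# Filter method: drop features whose variance is zero.
filter_mask = VarianceThreshold(threshold=0.0).fit(X).get_support()

# Wrapper method: Recursive Feature Elimination repeatedly refits the
# model and removes the weakest feature until two remain.
rfe = RFE(LinearRegression(), n_features_to_select=2).fit(X, y)
wrapper_mask = rfe.get_support()

# Embedded method: Lasso's L1 penalty shrinks unhelpful coefficients
# to exactly zero during training.
lasso = Lasso(alpha=0.1).fit(X, y)
embedded_mask = lasso.coef_ != 0
```

Each mask is a boolean array marking which columns that method would keep. On real data the three methods will not always agree, which is one reason to validate the selected set against held-out data.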

Feature Engineering for Different Data Types

Feature engineering strategies depend on the kind of data you are working with, because each data type needs its own methods to surface meaningful patterns. Here is how feature engineering applies to six common data types:

1. Numerical Data

- Scale or normalize values so that all the numbers are comparable.
- Create additional features by combining, comparing, or dividing existing numerical columns.

2. Categorical Data

- Use one-hot, label, or target encoding to translate categories into numerical values.
- Combine uncommon categories into a single "Other" class to cut down on noise.
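A minimal pandas sketch of grouping rare categories and then one-hot encoding the result. The city values and the `min_count` threshold of 2 are assumptions for illustration; a sensible threshold depends on the size of your dataset.

```python
import pandas as pd

# Hypothetical categorical column with two rare values.
cities = pd.Series(
    ["Paris", "Paris", "Lima", "Paris", "Oslo", "Lima", "Quito"], name="city"
)

# Group categories seen fewer than `min_count` times into "Other".
min_count = 2  # illustrative threshold
counts = cities.value_counts()
rare = counts[counts < min_count].index
grouped = cities.where(~cities.isin(rare), "Other")

# One-hot encode the reduced set of categories.
encoded = pd.get_dummies(grouped, prefix="city", dtype=int)
```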

3. Date and Time Data

- Extract the hour, day, weekday, month, and year from timestamps.
- Create flags for holidays, weekends, and seasons to help identify trends.

4. Text Data

- Use bag-of-words, TF-IDF, or embeddings to turn text into numerical features.
- Clean the text by lowercasing it and removing stop words and punctuation for consistency.
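As one possible sketch, scikit-learn's `TfidfVectorizer` can handle the lowercasing, stop-word removal, and TF-IDF weighting in a single step. The review texts are invented, and the built-in English stop-word list is only one option.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical product reviews.
reviews = [
    "The delivery was fast, and the packaging was great!",
    "Slow delivery. The box arrived damaged.",
    "Great product, fast shipping.",
]

# lowercase=True normalizes case; the default tokenizer drops punctuation;
# stop_words="english" removes common words such as "the" and "was".
vectorizer = TfidfVectorizer(lowercase=True, stop_words="english")

# Result: a sparse matrix with one row per review and one column per term.
tfidf = vectorizer.fit_transform(reviews)
vocabulary = sorted(vectorizer.vocabulary_)
```

Each row of `tfidf` can then be fed to a model like any other numeric feature vector.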

5. Time Series Data

- Create lag features or rolling averages to capture historical behavior.
- Incorporate seasonality, trends, or cumulative information to improve forecasts.
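Lag, rolling, and cumulative features can be sketched with pandas as follows. The daily `units` series is made up, and the window of 3 days is an arbitrary illustrative choice.

```python
import pandas as pd

# Hypothetical daily sales, indexed by date.
sales = pd.DataFrame(
    {"units": [10, 12, 9, 15, 14, 20, 18]},
    index=pd.date_range("2024-01-01", periods=7, freq="D"),
)

# Lag feature: yesterday's value.
sales["lag_1"] = sales["units"].shift(1)

# Rolling average of the previous 3 days. Shifting first means the
# window never includes the current day's value.
sales["rolling_mean_3"] = sales["units"].shift(1).rolling(window=3).mean()

# Cumulative information: running total up to and including each day.
sales["cumulative_units"] = sales["units"].cumsum()

# A simple seasonality signal: day of the week (Monday = 0).
sales["day_of_week"] = sales.index.dayofweek
```

The first rows contain missing values because there is no history yet; those rows are usually dropped or imputed before training.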

6. Image Data

- Extract meaningful patterns such as color histograms, edges, and shapes.
- In more sophisticated approaches, use pre-trained CNN embeddings as model features.
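For the simpler, hand-crafted end of the spectrum, a color histogram and a crude edge measure can be computed with NumPy alone. The image here is random synthetic data standing in for a real photo, and `color_histogram` is a hypothetical helper written for this example.

```python
import numpy as np

# Synthetic 32x32 RGB image standing in for a real photo.
rng = np.random.default_rng(42)
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)


def color_histogram(img, bins=8):
    """Concatenate per-channel intensity histograms, each normalized to sum to 1."""
    features = []
    for channel in range(img.shape[2]):
        counts, _ = np.histogram(img[:, :, channel], bins=bins, range=(0, 256))
        features.append(counts / counts.sum())
    return np.concatenate(features)


# 3 channels x 8 bins = 24 features describing the color distribution.
hist_features = color_histogram(image)

# A very rough edge feature: mean absolute difference between
# horizontally adjacent pixels of the grayscale image.
gray = image.mean(axis=2)
edge_strength = np.abs(np.diff(gray, axis=1)).mean()
```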

Understanding the type of data you have matters, because matching techniques to data types strengthens models and improves results throughout the data science process.

Common Mistakes in Feature Engineering

Even skilled data scientists can make mistakes in feature engineering, which, if not handled carefully, can result in lower model performance, lost time, and deceptive outcomes.

- Ignoring Data Quality: Using raw or messy data without adequate cleaning introduces errors, inconsistencies, and noise, which makes engineered features unreliable for training.
- Overloading Features Too Quickly: Adding too many features at once can make the model more complex and prone to overfitting instead of improving predictive accuracy.
- Creating Redundant Features: Duplicate or highly correlated features add computation and training time, offer little value, and can make the model harder to interpret.
- Using Future Information: Features derived from information that would not be available at prediction time cause data leakage. The model looks better than it is during evaluation and then fails on real-world predictions.
- Neglecting Domain Knowledge: Ignoring the context and meaning of the data makes it hard to create relevant features, so important real-world patterns are missed and performance drops.
- Failing to Scale Features: For algorithms that are sensitive to feature magnitude, leaving features with very different ranges unscaled can skew the model, slow learning, and produce erroneous predictions.
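Leakage and scaling often meet in one subtle mistake: fitting a scaler on the full dataset before splitting it, so statistics from the test rows influence training. A minimal sketch of the safer order, using synthetic data and scikit-learn, might look like this:

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic data for illustration only.
rng = np.random.default_rng(0)
X = rng.normal(loc=50.0, scale=10.0, size=(100, 2))
y = (X[:, 0] > 50.0).astype(int)

# Split first, so the test rows stay unseen during feature preparation.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Fit the scaler on the training rows only ...
scaler = StandardScaler().fit(X_train)

# ... then apply the same fitted transformation to both splits.
X_train_scaled = scaler.transform(X_train)
X_test_scaled = scaler.transform(X_test)
```

The same principle applies to imputation values, category lists, and any other statistic learned from data.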

Best Practices in Feature Engineering in Data Science

Feature engineering can make or break a model. Following a few best practices helps you produce high-quality features, work more efficiently, and stay aligned with the real-world problem.

- Understand the Problem: Start by gaining a thorough understanding of the business problem. Knowing the objectives keeps your features relevant and meaningful.
- Explore Your Data: Examine, summarize, and visualize the raw data. The correlations, patterns, and outliers you find guide which features to create and transform.
- Create Meaningful Features: Use domain expertise to combine, modify, or extract new features. Carefully crafted features reveal hidden patterns that models can use to improve predictions.
- Transform and Scale Features: Apply mathematical transformations, encoding, or normalization where needed. Many algorithms learn faster and behave more consistently across datasets when features are scaled appropriately.
- Select Relevant Features: Use statistical tests, correlation analysis, or importance metrics to keep only relevant features. This reduces noise and makes the model easier to interpret.
- Iterate and Validate: Test new features regularly and track performance gains. Iteration keeps your features evolving alongside your models and your project goals.
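One simple way to practice "iterate and validate" is to compare cross-validated scores with and without a candidate feature. The sketch below uses synthetic data in which a hypothetical target depends on weight divided by height squared, so the setup is deliberately favorable to the engineered feature; real gains are rarely this clean.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score

# Synthetic data: the (hypothetical) target depends on weight / height^2.
rng = np.random.default_rng(1)
n = 300
weight = rng.uniform(45, 120, size=n)
height = rng.uniform(1.4, 2.0, size=n)
target = weight / height**2 + rng.normal(scale=0.5, size=n)

# Baseline: raw columns only.
X_baseline = np.column_stack([weight, height])

# Candidate: raw columns plus the engineered ratio feature.
X_engineered = np.column_stack([weight, height, weight / height**2])

# Mean R^2 over 5 cross-validation folds for each feature set.
baseline_score = cross_val_score(
    LinearRegression(), X_baseline, target, cv=5
).mean()
engineered_score = cross_val_score(
    LinearRegression(), X_engineered, target, cv=5
).mean()
```

Keeping a feature only when it improves the validated score, rather than the training score, guards against adding features that merely fit noise.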

A Simple End-to-End Example

1. Raw Dataset

Imagine you want to predict house prices. Your raw data might include:

- House ID
- Date the house was built
- Size in square feet
- Price
- Last renovation year
- Neighborhood
- Owner age

At this stage, the data is raw and not very model-friendly.

2. Bad Features

If you use the raw data directly, some features don’t help the model:

- House ID → meaningless for price prediction
- Date Built → the model won’t understand it as “house age”
- Neighborhood → text format that the model cannot interpret directly

The model struggles because the important patterns aren’t obvious.

3. Improved Engineered Features

After feature engineering, you can transform the data into meaningful features:

- Calculate House Age = Current Year - Year Built
- Calculate Years Since Renovation = Current Year - Last Renovation Year
- Keep Size in square feet as is
- Keep Owner Age as is
- Encode Neighborhood numerically (e.g., A = 0, B = 1)

Now the model can clearly see patterns and relationships in the data.
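The engineered features listed above can be sketched in pandas. Everything concrete here is an assumption for illustration: the column names, the three sample houses, and the fixed `CURRENT_YEAR`, which is hard-coded only so the example is reproducible.

```python
import pandas as pd

CURRENT_YEAR = 2024  # assumption: fixed so the example gives stable results

# Hypothetical raw dataset following the article's house-price example.
houses = pd.DataFrame({
    "house_id": [101, 102, 103],
    "date_built": pd.to_datetime(["1990-05-01", "2005-09-15", "1978-03-20"]),
    "last_renovation_year": [2015, 2005, 2020],
    "size_sqft": [1400, 2100, 1650],
    "owner_age": [45, 38, 62],
    "neighborhood": ["A", "B", "A"],
    "price": [250_000, 410_000, 300_000],
})

features = pd.DataFrame({
    # House Age = Current Year - year the house was built.
    "house_age": CURRENT_YEAR - houses["date_built"].dt.year,
    # Years Since Renovation = Current Year - Last Renovation Year.
    "years_since_renovation": CURRENT_YEAR - houses["last_renovation_year"],
    # Kept as is.
    "size_sqft": houses["size_sqft"],
    "owner_age": houses["owner_age"],
    # Neighborhood encoded numerically, as in the text: A = 0, B = 1.
    "neighborhood_code": houses["neighborhood"].map({"A": 0, "B": 1}),
})

# House ID is dropped from the features; price is the prediction target.
target = houses["price"]
```

With only two neighborhoods, the 0/1 code works as a simple indicator. With more than two unordered categories, one-hot encoding is usually the safer choice, since integer codes can suggest an order that does not exist.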

4. Performance Improvement Conceptually

With engineered features:

- Predictions are more accurate
- Patterns like “older houses with recent renovations sell for more” become visible
- Model trains faster and generalizes better to new data

Conceptually, the same dataset produces better results just by presenting information in a way the model understands.

Gaining proficiency in feature engineering in data science alters your perspective on data. Helping your data convey its story effectively is more important than simply using sophisticated models or algorithms. Even basic models can work wonders with well-considered features, and chaotic data can be transformed into insightful knowledge. You get closer to reliable results with each cleaning, transformation, or feature creation phase. Your models get stronger the more you experiment, practice, and comprehend your data. Spending time on features results in better choices, more intelligent solutions, and increased confidence in each project you take on.