Understanding Genetic Algorithms in Machine Learning

Discover how genetic algorithms enhance machine learning optimization, tackle complex problems, and give professionals a competitive advantage in AI solutions.

Imagine missing out on innovative solutions to complex machine learning problems. Many real-world challenges in machine learning have no straightforward formula or easy path to a solution. They often involve a vast search space full of dead ends and misleading local optima. As a result, standard optimization techniques frequently converge prematurely and fail to find good solutions.

We need a different approach when finding the ideal solution directly becomes too difficult or slow. Rather than imposing a solution through mathematical rules, a machine learning system can let a variety of candidate solutions compete. Over time, the stronger ones survive while the weaker ones are gradually discarded.

This concept gives rise to genetic algorithms, which gradually improve solutions by drawing inspiration from natural evolution. Instead of computing the optimal solution directly, they explore candidates, modify them, and produce better outcomes over successive generations.

What Is a Genetic Algorithm?

A genetic algorithm is a problem-solving technique inspired by the processes of nature. It evaluates many options at the same time in an effort to identify better solutions. Instead of following fixed procedures, it gradually improves outcomes by determining which solutions are most effective.

In simple terms, the algorithm generates a wide range of candidate answers and progressively refines them. It helps machine learning systems find good answers, especially when the rules of the problem are unclear. Over time, strong solutions persist, while weaker ones tend to fade away.

Core Components of a Genetic Algorithm

- Chromosome Representation: Potential solutions to a problem are encoded as structured data, such as characters or numbers.
- Population: A collection of candidate solutions is maintained so that several options can be explored simultaneously.
- Fitness Function: This function evaluates each solution's quality in relation to the objectives of the problem and the intended results.
- Selection: Better-performing solutions are selected as parents so that their beneficial traits pass to the following generation.
- Crossover: New solutions with shared strengths are created by combining elements of two parent solutions.
- Mutation: Small random changes are made to preserve variation and keep the algorithm from becoming stuck.
- Termination Criteria: The algorithm terminates after a predetermined number of generations or when a solution is satisfactory.

The various elements work together to drive the evolution and enhancement of solutions over time. Each stage is essential for achieving better outcomes while staying focused on machine learning objectives. It is important to ensure that the algorithm concludes when meaningful and valuable answers have been discovered.

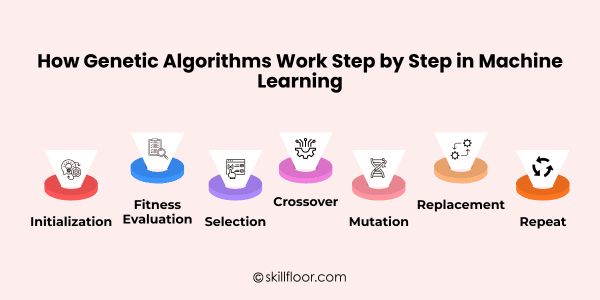

How Genetic Algorithms Work Step by Step in Machine Learning

Natural evolution serves as the model for optimization and search techniques known as genetic algorithms. They are frequently employed in machine learning to handle challenging issues by gradually refining solutions. Here is a detailed, step-by-step breakdown of how this procedure operates:

1. Initialization

Initialization is the first stage of a genetic algorithm, during which a random population of possible solutions is generated. This varied starting point lets the algorithm explore a wide range of options, which raises the likelihood of discovering good solutions.
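As a minimal sketch of this stage, the snippet below builds a random population of bit-string chromosomes. The function name `init_population`, the binary encoding, and the sizes used are assumptions chosen for illustration; a real problem may need a different encoding.

```python
import random

def init_population(pop_size, chrom_length, rng):
    """Create pop_size random bit-string chromosomes of chrom_length genes."""
    return [[rng.randint(0, 1) for _ in range(chrom_length)]
            for _ in range(pop_size)]

rng = random.Random(42)  # fixed seed so the run is repeatable
population = init_population(pop_size=6, chrom_length=8, rng=rng)
for chromosome in population:
    print(chromosome)
```

Passing the random generator in explicitly keeps runs reproducible, which makes it easier to compare parameter settings later.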

2. Fitness Evaluation

A fitness function is used to evaluate each solution in the population during the Fitness Evaluation stage. By evaluating how effectively a solution satisfies the objectives of the challenge, this function aids in identifying the most promising options for the following generation.
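To make this concrete, here is a small sketch that uses the classic OneMax toy problem, where fitness is simply the number of 1 bits in a chromosome. The problem choice and the hard-coded population are assumptions for illustration only; in practice the fitness function encodes the real objective, such as validation accuracy.

```python
def fitness(chromosome):
    """OneMax toy objective: count the 1 bits; higher is better."""
    return sum(chromosome)

population = [[1, 0, 1, 1], [0, 0, 0, 1], [1, 1, 1, 1]]
scores = [fitness(c) for c in population]
print(scores)  # [3, 1, 4]

best = max(population, key=fitness)
print(best)    # [1, 1, 1, 1]
```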

3. Selection

In the Selection step, the algorithm chooses solutions from the existing population to serve as the parents of the following generation. Stronger solutions are more likely to be chosen, which makes it likely that beneficial traits are inherited.

- Roulette Wheel Selection: Solutions are selected at random with probability weighted by fitness, so stronger solutions are favored while weaker ones still have a chance.
- Tournament Selection: A few solutions are chosen at random, and the best of them becomes a parent for crossover.
- Rank Selection: Solutions are ranked by fitness, and selection probability is determined by rank instead of raw fitness scores.
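The three selection schemes above can be sketched in a few lines each. The function names and the tournament size `k=3` are assumptions for illustration, and the roulette version assumes non-negative fitness scores with a positive total.

```python
import random

def roulette_select(population, scores, rng):
    """Pick one individual with probability proportional to its fitness.
    Assumes non-negative scores with a positive total."""
    return rng.choices(population, weights=scores, k=1)[0]

def tournament_select(population, scores, rng, k=3):
    """Sample k distinct individuals and return the fittest of them."""
    contenders = rng.sample(range(len(population)), k)
    winner = max(contenders, key=lambda i: scores[i])
    return population[winner]

def rank_select(population, scores, rng):
    """Weight each individual by its rank (1 = worst) instead of raw fitness."""
    order = sorted(range(len(population)), key=lambda i: scores[i])
    ranks = [0] * len(population)
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    return rng.choices(population, weights=ranks, k=1)[0]

rng = random.Random(0)
population = [[0, 0, 0], [1, 0, 0], [1, 1, 0], [1, 1, 1]]
scores = [sum(c) for c in population]
print(tournament_select(population, scores, rng))
print(rank_select(population, scores, rng))
```

Rank selection can be useful when one individual has a much higher raw score than the rest, because the weights depend only on ordering and not on the size of the gap.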

4. Crossover

The Crossover phase creates new offspring by combining chosen parent solutions. By mixing characteristics from both parents, this technique lets the algorithm discover more effective solutions that draw on each parent's strengths.

- Single-Point Crossover: Two new offspring are produced by swapping the segments of the parent chromosomes that follow a randomly selected point.
- Two-Point Crossover: Two points are chosen, and the parents exchange the segment between them to produce offspring with mixed traits.
- Uniform Crossover: Each gene is independently selected with equal chance from either parent, giving a balanced combination of both parents' traits.
- Arithmetic Crossover: For real-valued chromosome representations, offspring are constructed as a weighted average of the parent values.
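The four crossover operators above might look like the following sketch. Chromosomes are plain Python lists of equal length, the function names are illustrative, and the default weight `alpha=0.5` for arithmetic crossover is an assumption. Two-point crossover as written needs chromosomes with at least three genes.

```python
import random

def single_point_crossover(a, b, rng):
    """Swap the tails of two parents after one random cut point."""
    point = rng.randint(1, len(a) - 1)  # cut strictly inside the chromosome
    return a[:point] + b[point:], b[:point] + a[point:]

def two_point_crossover(a, b, rng):
    """Exchange the segment between two random cut points."""
    i, j = sorted(rng.sample(range(1, len(a)), 2))
    return a[:i] + b[i:j] + a[j:], b[:i] + a[i:j] + b[j:]

def uniform_crossover(a, b, rng):
    """Take each gene from either parent with equal probability."""
    child1, child2 = [], []
    for x, y in zip(a, b):
        if rng.random() < 0.5:
            x, y = y, x
        child1.append(x)
        child2.append(y)
    return child1, child2

def arithmetic_crossover(a, b, alpha=0.5):
    """Blend real-valued parents as weighted averages of their genes."""
    child1 = [alpha * x + (1 - alpha) * y for x, y in zip(a, b)]
    child2 = [(1 - alpha) * x + alpha * y for x, y in zip(a, b)]
    return child1, child2

rng = random.Random(0)
print(single_point_crossover([0] * 6, [1] * 6, rng))
print(arithmetic_crossover([0.0, 2.0], [2.0, 4.0]))  # ([1.0, 3.0], [1.0, 3.0])
```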

5. Mutation

During mutation, small random modifications are applied to the offspring solutions. This preserves population diversity, which helps prevent premature convergence and lets the algorithm explore new regions of the solution space.

- Bit-Flip Mutation: A binary gene is flipped from 0 to 1 or from 1 to 0, making a small random change to the chromosome.
- Swap Mutation: Two genes in a chromosome exchange positions, which explores different orderings without changing the values the chromosome contains.
- Random Resetting: A gene's value is replaced with a randomly selected value from its permitted range, which makes the population more varied.
- Gaussian Mutation: A small random value drawn from a Gaussian distribution is added to a gene; this method is frequently applied to real-valued chromosomes.
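A rough sketch of these four mutation operators follows. The per-gene mutation `rate`, the integer range used for random resetting, and the standard deviation `sigma` are illustrative parameters, not recommended values.

```python
import random

def bit_flip(chrom, rate, rng):
    """Flip each binary gene independently with probability rate."""
    return [1 - g if rng.random() < rate else g for g in chrom]

def swap_mutation(chrom, rng):
    """Exchange the values at two distinct random positions."""
    i, j = rng.sample(range(len(chrom)), 2)
    child = list(chrom)
    child[i], child[j] = child[j], child[i]
    return child

def random_reset(chrom, rate, low, high, rng):
    """Replace a gene with a fresh integer in [low, high] with probability rate."""
    return [rng.randint(low, high) if rng.random() < rate else g for g in chrom]

def gaussian_mutation(chrom, rate, sigma, rng):
    """Add zero-mean Gaussian noise to a real-valued gene with probability rate."""
    return [g + rng.gauss(0.0, sigma) if rng.random() < rate else g for g in chrom]

rng = random.Random(0)
print(bit_flip([0, 1, 0, 1, 0, 1], rate=0.2, rng=rng))
print(swap_mutation([1, 2, 3, 4, 5], rng))
print(gaussian_mutation([0.5, 1.5, 2.5], rate=0.5, sigma=0.1, rng=rng))
```

Swap mutation keeps the same set of values, which is why it suits permutation-style encodings such as visiting orders.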

6. Replacement

In the Replacement stage, offspring and, in some schemes, selected parents are combined to create a new generation of solutions. This keeps the population changing over time, and when the best parents are carried forward it also retains the best answers while new variations are added.
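One common way to keep the best answers is elitism. The sketch below assumes a simple variant in which the `elite_count` fittest parents are copied forward and the remaining slots are filled with the fittest offspring; other replacement schemes exist, and the function name is illustrative.

```python
def replace_with_elitism(parents, offspring, fitness, elite_count=1):
    """Build the next generation from the best parents plus the best offspring."""
    elites = sorted(parents, key=fitness, reverse=True)[:elite_count]
    slots = len(parents) - elite_count
    survivors = sorted(offspring, key=fitness, reverse=True)[:slots]
    return elites + survivors

parents = [[1, 1, 1], [0, 0, 0]]
offspring = [[0, 1, 0], [0, 0, 1]]
next_generation = replace_with_elitism(parents, offspring, fitness=sum)
print(next_generation)  # [[1, 1, 1], [0, 1, 0]]
```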

7. Repeat

During the Repeat stage, the fitness evaluation, selection, crossover, mutation, and replacement procedures are carried out over several generations. With each iteration the population tends to improve, and the algorithm moves closer to a good solution. This cycle is repeated until the algorithm satisfies the stopping criterion.
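Putting the stages together, the following is a compact sketch of a complete loop on the OneMax toy problem (maximize the number of 1 bits). It assumes tournament selection, single-point crossover, bit-flip mutation, and single-individual elitism; the function name `evolve` and every parameter value are illustrative rather than tuned.

```python
import random

def fitness(chrom):
    """OneMax toy objective: number of 1 bits."""
    return sum(chrom)

def tournament(pop, rng, k=3):
    """Return the fittest of k randomly sampled individuals."""
    return max(rng.sample(pop, k), key=fitness)

def evolve(pop_size=30, length=20, generations=100, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    # 1. Initialization: random bit strings
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        # 2. Fitness evaluation and termination check
        best = max(pop, key=fitness)
        if fitness(best) == length:
            break
        # 6. Replacement with elitism: the best individual survives unchanged
        next_pop = [best[:]]
        while len(next_pop) < pop_size:
            # 3. Selection
            p1, p2 = tournament(pop, rng), tournament(pop, rng)
            # 4. Single-point crossover
            point = rng.randint(1, length - 1)
            child = p1[:point] + p2[point:]
            # 5. Bit-flip mutation
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            next_pop.append(child)
        pop = next_pop  # 7. Repeat with the new generation
    best = max(pop, key=fitness)
    return best, fitness(best)

best, score = evolve()
print(best, score)
```

Because the best individual is copied forward unchanged, the best fitness in the population can never decrease from one generation to the next in this sketch.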

Applications of Genetic Algorithms in Machine Learning

Genetic algorithms improve model accuracy, efficiency, and overall performance across complex datasets by optimizing features, hyperparameters, neural architectures, rules, clustering, and reinforcement policies.

1. Feature Selection

By choosing the most pertinent features, genetic algorithms can increase model accuracy, reduce overfitting, and speed up training.
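A common encoding for feature selection is a bit mask with one gene per feature. The sketch below assumes that encoding and a fitness equal to a model score minus a small penalty per selected feature. The scorer `toy_score`, the feature names, and the penalty value are all made up for illustration; in practice the score would come from training and validating a real model on the selected columns.

```python
def selected_features(mask, feature_names):
    """Return the names of the features whose mask bit is 1."""
    return [name for bit, name in zip(mask, feature_names) if bit]

def feature_fitness(mask, score_subset, penalty=0.01):
    """Score of the selected subset minus a small cost per feature kept."""
    if not any(mask):
        return float("-inf")  # an empty feature set is not a valid solution
    return score_subset(mask) - penalty * sum(mask)

def toy_score(mask):
    """Stand-in for a validation score: pretends only features 0 and 2 help."""
    return 0.5 + 0.2 * mask[0] + 0.2 * mask[2]

names = ["f0", "f1", "f2", "f3"]  # hypothetical feature names
for mask in ([1, 1, 1, 1], [1, 0, 1, 0], [1, 0, 0, 0]):
    print(selected_features(mask, names), round(feature_fitness(mask, toy_score), 2))
```

The penalty term is what pushes the search toward smaller subsets when two masks score about the same.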

2. Hyperparameter Optimization

GAs explore large search spaces and evolve well-performing hyperparameter settings, improving model performance without the need for manual trial-and-error tuning.
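For hyperparameter search, each chromosome has to be decoded into a concrete configuration before it can be scored. The sketch below assumes three real-valued genes in the range 0 to 1 and maps them to a hypothetical learning rate, hidden-unit count, and activation function; the ranges and names are illustrative choices, not recommendations.

```python
def decode(genes):
    """Map three genes in [0, 1] to a hypothetical hyperparameter set."""
    lr_gene, units_gene, act_gene = genes
    activations = ["relu", "tanh", "sigmoid"]
    index = min(int(act_gene * len(activations)), len(activations) - 1)
    return {
        "learning_rate": 10 ** (-4 + 3 * lr_gene),            # log scale, 1e-4 to 1e-1
        "hidden_units": 16 + round(units_gene * (256 - 16)),  # integer from 16 to 256
        "activation": activations[index],
    }

print(decode([0.0, 0.0, 0.0]))
print(decode([0.5, 0.5, 0.5]))
print(decode([1.0, 1.0, 1.0]))
```

Searching the learning rate on a logarithmic scale means a mutation of a given size changes it by a similar factor anywhere in its range. The fitness of a chromosome would then be the validation score of a model trained with the decoded settings.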

3. Neural Network Architecture Search

GAs can automatically design better-performing neural networks by optimizing architectural choices such as the number of layers, the number of neurons, and the activation functions.

4. Rule Discovery and Symbolic Regression

In order to produce understandable insights, GAs balance model accuracy with simplicity by extracting mathematical expressions and interpretable rules from data.

5. Clustering and Classification Problems

By adjusting centroids, decision boundaries, or feature subsets, GAs can enhance clustering and classification, increasing accuracy across complicated datasets.

6. Reinforcement Learning Policy Optimization

GAs find ways to maximize long-term rewards in dynamic situations by optimizing policies in reinforcement learning.

Advantages of Genetic Algorithms

When traditional methods fail, genetic algorithms effectively evolve high-quality solutions. They provide robust optimization, global search capabilities, adaptability to complicated problems, and model-agnostic answers.

- Global Optimization Capability
- Flexibility Across Problem Types
- Robustness to Complex Search Spaces
- Parallelism and Efficiency
- Ability to Escape Local Optima
- Adaptability and Scalability

Limitations and Challenges

Genetic algorithms have drawbacks despite their advantages, such as sensitivity to parameter values, slower convergence, high processing cost, and no assurance of the best result.

- High Computational Cost
- Slow Convergence on Complex Problems
- Parameter Sensitivity
- Risk of Premature Convergence
- Difficulty with Real-Time Applications
- No Guaranteed Global Optimum

Genetic Algorithms vs Other Optimization Techniques

Despite their strength, genetic algorithms are not the sole machine learning optimization technique. This is how GAs stack up against other widely used methods:

1. Genetic Algorithms vs Gradient Descent

- Although gradient descent is effective for differentiable functions, it has trouble with local minima and complex landscapes.
- GAs are more adept at handling non-differentiable, discontinuous, or extremely rough search spaces because they are derivative-free.

2. Genetic Algorithms vs Simulated Annealing

- Simulated annealing explores solutions probabilistically, but it depends on a cooling schedule and can become stuck in local optima.
- GAs increase the reliability of global searches by keeping a population and evolving several solutions at once.

3. Genetic Algorithms vs Particle Swarm Optimization (PSO)

- PSO converges rapidly on smooth search spaces, but it runs the risk of premature convergence on complex problems.
- GAs maintain exploration while enhancing the quality of solutions by promoting variety through crossover and mutation.

4. Genetic Algorithms vs Grid/Random Search

- Grid search tests parameter combinations exhaustively, which is computationally costly, while random search may overlook promising regions.
- GAs explore large, high-dimensional search spaces adaptively, using the fitness of earlier candidates to steer the search toward promising regions.

Real-World Examples of Genetic Algorithms in Machine Learning

1. Feature Selection in Healthcare

GA improves model accuracy while lowering computational complexity and training time by choosing the most pertinent patient features for diabetes prediction.

2. Hyperparameter Optimization

By optimizing neural network learning rates, neuron counts, and activation functions, GA improves performance without requiring a great deal of manual trial-and-error testing.

3. Neural Architecture Search

By automatically adjusting network topologies, GA finds effective layer configurations and neuron connections for tasks like speech recognition and image classification.

4. Reinforcement Learning Policy Optimization

GA optimizes policies for robotic path planning and dynamic simulations, maximizing long-term rewards in complex, changing environments.

Common Misconceptions About Genetic Algorithms

Many people misunderstand how genetic algorithms operate and assume they are perfect or always find the best answer quickly:

1. Always Finds Best

Genetic algorithms improve solutions over time, but they do not guarantee finding the best possible outcome.

2. More Generations Better

Increasing the number of generations helps only up to a point. Results often plateau, and running further generations past that point wastes computational resources in real-world applications.

3. Replaces ML Models

Genetic algorithms are optimization tools rather than models in themselves, and they are used alongside machine learning models rather than in place of them.

4. Mutation Is Optional

Mutation is necessary for exploring new solution possibilities, since it preserves diversity and prevents premature convergence.

5. Selection Ensures Success

Crossover and mutation are equally important for producing varied, better offspring solutions, even though selection favors strong solutions.

6. Always Slow Algorithm

For some kinds of problems, genetic algorithms can be efficient when run with small populations and well-tuned parameters.

Future Scope of Genetic Algorithms

Genetic algorithms are still developing, particularly in machine learning, where they improve search and optimization tasks, and they offer potential answers to challenging optimization problems across sectors.

1. Hybrid Evolutionary Models

Combining genetic algorithms with other optimization approaches can increase their effectiveness and accuracy and produce more reliable results.

2. Integration with Deep Learning

GAs can enhance performance in deep learning tasks by optimizing neural network designs, weights, and hyperparameters.

3. Automated Machine Learning (AutoML)

By automating feature engineering, hyperparameter tuning, and model selection, GAs make machine learning more approachable and less dependent on expert input.

4. Evolutionary Strategies in Reinforcement Learning

Because GAs can adapt policies and strategies, they help reinforcement learning agents function efficiently in complex, dynamic environments.

5. Optimization in Robotics

For more effective autonomous systems, they optimize job scheduling, adaptive control, and robotic motion planning.

6. Industrial Process Improvements

GAs improve efficiency and overall results while cutting costs through resource allocation, manufacturing, and logistics optimization.

Genetic algorithms show how problems that conventional approaches find difficult can be tackled with ideas borrowed from nature. By allowing solutions to evolve over time, we find smarter ways to improve models, select features, and tune neural networks. Anyone working with data will find the technique interesting, since it rewards experimentation, creativity, and learning from incremental improvement. With a genetic algorithm, you examine a wide range of options, adapt, and develop solutions that genuinely fit difficult problems. Used well, this approach can make machine learning projects more effective, more creative, and even more enjoyable.