Understanding the Core Components of Machine Learning

Discover the core components of Machine Learning—data, algorithms, models, training, deployment, and evaluation—explained in clear, simple, easy-to-understand words.

Why are modern technologies and services so intelligent at making suggestions, identifying suspicious activity, or assisting us in finding things fast? Machine learning, which uses examples rather than strict guidelines to teach systems, holds the key to the solution. It helps in transforming unprocessed data into actionable steps that people can trust.

Hospitals assist doctors in identifying trends, factories monitor machinery to prevent malfunctions, shops recommend products, and websites display news you might find interesting. We currently use these systems daily. Anyone who understands the fundamentals of machine learning can see how data is transformed into practical outcomes that help with actual tasks.

What is Machine Learning?

Machine learning enables computers to learn from examples rather than relying on predefined rules. It assists systems in identifying trends in data and making predictions or decisions. For instance, by analyzing your previous preferences and interests, a machine learning system can suggest movies that you might enjoy.

The basic concept is simple: if you provide the computer enough information, it will identify helpful connections that will direct subsequent outcomes. Every day, machine learning is used by banks, hospitals, shops, and even smartphone apps. These days, it's a popular tool that simplifies life by transforming data into more intelligent ideas and actions.

The Significance of Machine Learning in Modern Technology

-

Smarter Decision Making: Machine learning improves performance without constant human input, learns from historical data, and automatically adjusts to new circumstances, all of which help software and gadgets make better decisions.

-

Faster Data Analysis: Using quick analysis of huge volumes of data, it enables businesses to spot issues, trends, and patterns that people might overlook, improving the accuracy and efficiency of procedures.

-

Personalized Recommendations: In order to help people locate goods, videos, or other content that they are likely to enjoy, machine learning provides personalized recommendations in social networking, streaming, and shopping.

-

Healthcare Predictions: By properly analyzing patient data and medical histories, the Importance of Machine Learning in healthcare is shown in the ability to anticipate ailments, assist in diagnosis, and recommend therapies.

-

Industry Safety Improvements: By anticipating equipment failures, identifying anomalous behavior, and decreasing downtime, it enhances industry safety and maintenance, resulting in cost savings and fewer accidents.

-

Everyday Tool Support: Machine learning makes technology more responsive, useful, and dependable for consumers in their daily lives by supporting commonplace products like voice assistants, navigation apps, and fraud detection.

Exploring the Different Types of Machine Learning

1. Supervised Learning

A system that learns from examples with pre-given answers is said to be supervised. A model might examine emails marked as "spam" or "not spam," for example. It learns rules and makes predictions for new emails by observing a lot of examples. When a large amount of labeled data is available, it functions optimally.

2. Unsupervised Learning

Unsupervised learning is the process by which a system analyzes input that has no labels. It searches for unnoticed patterns or clusters. It might, for instance, unite clients with comparable purchasing patterns. Companies utilize it to learn about user behavior, identify organic clusters, or uncover insights in situations where no definitive solutions are given in advance.

3. Semi-Supervised Learning

Labeled and unlabeled data are both present in semi-supervised learning. A small sample of labeled data is mixed with larger unlabeled data sets in this strategy, which helps because labeled data can be challenging to gather. Compared to identifying everything by hand, it saves money and work while increasing accuracy.

4. Reinforcement Learning

Systems can be trained by trial and error in reinforcement learning. In response to rewards or punishments, the system acts, observes outcomes, and gains knowledge. A nice example would be teaching a program to play games or a robot to walk. It becomes improved over time by repeating actions and changing behavior to get better outcomes.

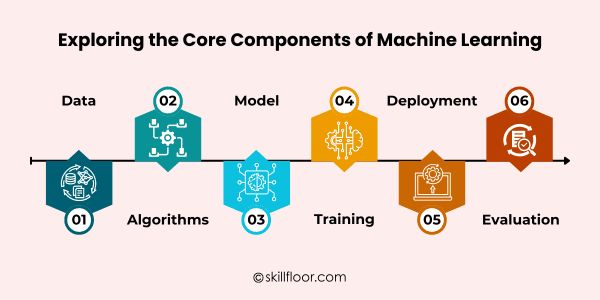

Exploring the Core Components of Machine Learning

1. Data

The basis of machine learning is data. It contains the data and illustrations that a system utilizes to identify trends and forecast outcomes. Good data is accurate, comprehensive, and clean.

-

Quality Matters: Proper learning is ensured by high-quality data. The system can better comprehend patterns and generate more dependable solutions in real-life activities when given clear, accurate, and consistent examples.

-

Quantity Helps Learning: A sufficient amount of data makes it easier for the algorithm to identify trends. While larger datasets enhance accuracy and performance, smaller datasets may restrict learning.

-

Types of Data: Data can take the form of sounds, images, text, or numbers. For the system to comprehend and employ each type effectively for learning tasks, it needs to be properly prepared.

2. Algorithms

The set of guidelines or procedures that a system uses to learn from data is called an algorithm. They support the system's pattern recognition and decision-making using the available data.

-

Learning Methods: The system's learning process is guided by algorithms. The effectiveness with which the system extracts valuable insights from the data depends on many techniques, such as rules-based or pattern-based approaches.

-

Choosing the Right Algorithm: The goal and type of data determine which algorithm is best. Knowing machine learning algorithms makes it easier to choose the optimum approach, increasing efficiency and accuracy; yet, making the wrong decision could have negative effects.

-

Types of Algorithms: There are several kinds of algorithms, including reinforcement, supervised, unsupervised, and semi-supervised ones. Depending on the data at hand and the learning objectives, each category has a distinct function.

3. Model

A model is what a system produces after employing an algorithm to learn from data. The system can accurately forecast or make decisions on fresh, unknown cases thanks to the patterns and knowledge it has learned.

-

Training Outcome: The training data teaches the model patterns. The algorithm and data quality have an impact on its performance. Learning outcomes are more accurate when data and algorithms are improved.

-

Testing the Model: Separate examples that were not utilized for training are used to verify the models. They are tested to make sure they don't only learn the training material by heart and can accurately forecast fresh data.

-

Improving Models: By changing parameters, adding more data, or experimenting with various algorithms, models can be improved. Frequent updates enable the model to make predictions with greater accuracy and adjust to shifting circumstances.

4. Training

The process by which a system analyzes data and modifies itself to carry out a task accurately is called training. Repeated learning aids the system in becoming more accurate and producing better predictions over time, much like honing a talent.

-

Learning from Data: The system analyzes examples, looks for trends, and modifies its rules throughout training. When the system comes across fresh or unexplored data, this procedure aids in its ability to make accurate predictions or judgments.

-

Avoiding Mistakes: The system won't overfit the data or learn inaccurate patterns if it is properly trained. This guarantees that it works effectively on fresh samples and yields trustworthy outcomes in practical settings.

-

Continuous Improvement: New or updated data can be used to repeat the training process. Frequent practice makes it possible for the system to maintain accuracy, adjust to changes, and gradually enhance predictions for improved overall performance.

5. Deployment

The step of deployment is when a learned model is applied to actual circumstances. The system can successfully assist users and give useful value by making forecasts, making recommendations, or supporting decisions.

-

Real-World Use: Deployment enables the system to operate on real-time data, assisting users in making more precise and timely decisions. It guarantees the model yields practical applications and useful outcomes in real-world scenarios.

-

Monitoring Performance: The system should be routinely checked to make sure it is operating properly after deployment. Incoming data errors or modifications might necessitate prompt notice and remedial measures.

-

Updating the System: Machine Learning Models require frequent updates with fresh information and adjustments. Regular updates ensure the system stays accurate, dependable, and flexible enough to adapt to changing patterns or real-world conditions.

6. Evaluation

The process of evaluating a trained model's performance is called evaluation. Prior to being applied in practical settings, it evaluates the model's accuracy, dependability, and usefulness to make sure it functions effectively and consistently.

-

Measuring Accuracy: Metrics and tests are used to determine whether a model's predictions correspond to the anticipated outcomes. Reliable performance on fresh, unknown data by accurate models instills confidence in practical applications and judgments.

-

Identifying Weaknesses: Errors, faults, or patterns that the model finds difficult to anticipate accurately are revealed by evaluation. When applied to real-world scenarios, this knowledge aids in directing enhancements, resolving problems, and avoiding subpar performance.

-

Improving Reliability: Retraining, fine-tuning, or adjusting the model might be done based on evaluation outcomes. As data or real-world circumstances evolve over time, forecasts are kept reliable and correct through ongoing evaluation.

How the Components of Machine Learning Work Together

-

Collecting Data: The first step in the process is collecting data, which gives the system the facts and illustrations it needs. The algorithm can learn the right patterns and generate insightful predictions if the data is accurate and of high quality.

-

Choosing Algorithms: Data-driven system learning is determined by algorithms. By selecting the appropriate algorithm, patterns are efficiently collected, assisting the system in making precise judgments and forecasts.

-

Building the Model: The algorithm learns from the data to create the model. It keeps track of patterns and rules, which enables the system to accurately and efficiently anticipate outcomes for new samples.

-

Training the System: During training, the model is continuously adjusted using data. Comprehending machine learning techniques at this point enhances accuracy, fortifies learning, and gets the system ready for practical uses.

-

Deployment in Action: The model is put to use on real-time data after it has been trained. It gives people useful information for daily work by making forecasts, recommendations, or decisions.

-

Evaluation and Feedback: Evaluation measures the model's performance. Feedback from testing or actual use aids in enhancing precision, correcting errors, and maintaining the system's dependability over time.

The Key Challenges Faced in Machine Learning

-

Poor Data Quality: Untidy or poor-quality data might cause a system to malfunction. Inaccurate, inconsistent, or missing data hinders models' capacity to identify patterns, which lowers the accuracy of forecasts in practical applications.

-

Insufficient Data: Learning is hampered by insufficient data. Models may perform poorly, fail to generalize, or produce erroneous predictions when given small datasets. To successfully capture true patterns, more instances are frequently required.

-

Overfitting: A model that learns the training data too thoroughly—memorizing samples rather than recognizing patterns—is said to be overfit. It does poorly on fresh, untested data but well on trained data.

-

Underfitting: When a model is too basic to identify patterns in the data, it is said to be underfitting. It misses significant correlations or trends and performs poorly on both training and fresh data.

-

Choosing the Right Algorithm: Choosing the incorrect algorithm for a task or dataset might result in decreased efficiency and accuracy. Success depends on knowing the problem and using the appropriate strategy.

-

Changing Real-World Conditions: Conditions that change, such as new client patterns or behaviors, might cause models to fail. Model accuracy and relevance require constant monitoring, updating, and retraining.

Emerging Trends in Machine Learning

Future developments in machine learning will influence how technology advances and adapts. Better forecasts, quicker learning, more intelligent systems, and more useful applications in a variety of global businesses are the main focuses of current developments.

-

Automated Machine Learning: Making models is made easier by automation. These days, tools make machine learning more accessible and cut down on development time by assisting users in choosing algorithms, fine-tuning settings, and preprocessing data.

-

Edge Computing: Rather than utilizing cloud servers, models are instead operating on gadgets such as smartphones and sensors. Decisions may be made more quickly and in real time, and latency is decreased and privacy is enhanced.

-

Explainable Models: Users can better comprehend why models produce predictions when transparency is prioritized. Transparent explanations enhance accountability, adoption, and trust in crucial applications.

-

Federated Learning: Local devices retain data, and models learn from information that is dispersed. Without disclosing private information, this method improves security and privacy.

-

TinyML: Machine learning is getting smaller so it can operate on low-power, tiny devices. It makes it possible for wearables, smart sensors, and Internet of Things devices to function effectively offline.

-

Self-Supervised Learning: Models generate their own training signals in order to learn from unlabeled data. This speeds up from large datasets and lessens the need for manual labeling.

Our daily lives now involve machine learning, which subtly improves the usefulness and intelligence of equipment. It touches on a wide range of topics, from suggesting videos and goods to assisting medical professionals in identifying patterns or maintaining the safety of machinery. Anyone can observe how raw information is transformed into actionable steps by comprehending the components of machine learning, which include data, algorithms, models, training, deployment, and evaluation. Notwithstanding obstacles like inadequate data or shifting circumstances, the discipline keeps expanding thanks to new techniques and more astute strategies. Understanding even the fundamentals paves the way for developing workable solutions that simplify life. Examining Machine Learning's Components demonstrates how technology and humans may collaborate to effectively and meaningfully tackle practical challenges.