Ultimate Guide to Data Wrangling in Data Science

Master data wrangling in data science with this ultimate guide. Learn data cleaning, transformation, tools, and strategies to build reliable, high-impact models.

Don't overlook the power that lies within your data. Businesses collect vast amounts of raw data every second, including payment records, smart device data, and user clicks. However, raw data is often incomplete or inconsistent, and incomplete information leads to misunderstandings. Data science turns clean, well-organized data into understandable insights that help teams make decisions more quickly and effectively amid this confusion.

This is where data wrangling in data science becomes essential.

According to Grand View Research, the data wrangling market was valued at USD 3.59 billion in 2024 and is expected to grow at a rate of 12.0% annually to reach USD 10.32 billion by 2033. This highlights the increasing importance of clean data for many companies.

Understanding data wrangling is what separates average analysts from highly effective data professionals, regardless of whether you're using Python, R, SQL, or even Microsoft Excel.

What is Data Wrangling?

The process of cleaning and preparing raw data for analysis is known as data wrangling. It transforms jumbled, incomplete, or disorganized data into an understandable, structured format.

Typical tasks include handling missing values, eliminating duplicates, correcting mistakes, and formatting data consistently. Data wrangling helps ensure that data is correct, trustworthy, and ready for reporting, analysis, or machine learning models.

It involves:

- Cleaning incorrect or missing values
- Transforming data formats
- Integrating multiple data sources
- Structuring data for modeling

Data Wrangling vs Data Cleaning vs Preprocessing

- Data Cleaning → Fixing errors, missing values, duplicates
- Data Wrangling → Cleaning + transforming + restructuring
- Data Preprocessing → Preparing data specifically for machine learning
- ETL (Extract, Transform, Load) → Data pipeline process in data engineering

Consider data wrangling the broader term. It turns messy, unstructured data into structured data that supports analysis, and that is central to the role data science plays in enabling sound decision-making.

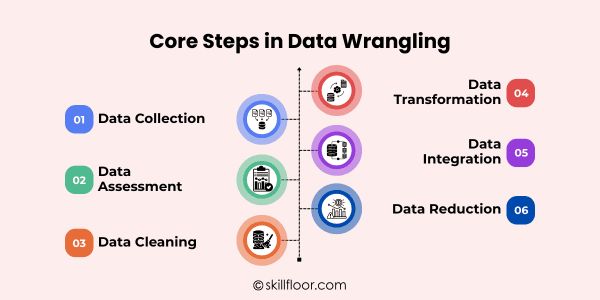

Core Steps in Data Wrangling (Step-by-Step Framework)

The essential processes in data wrangling are collecting raw data, assessing its structure and quality, correcting errors, converting formats, integrating sources, and reducing noise. Datasets are validated throughout so they support precise analysis and modeling.

Step 1: Data Collection

Data collection gathers raw information from many sources before cleaning and analysis begin. Collecting carefully, with consistent formats and documented sources, lays the foundation for reliable datasets and for every later wrangling step.

- Sources: CSV, Excel, APIs, Databases
- Web scraping basics
- Challenges in data ingestion
- Data format compatibility
- Storage considerations
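As a minimal sketch of ingestion in Python, the snippet below uses pandas to load a CSV export and a nested JSON payload. The file contents, the column names (`order_id`, `customer_id`, `amount`, `order_date`), and the payload shape are hypothetical. `io.StringIO` stands in for a real file so the example runs on its own.

```python
import io

import pandas as pd

# Hypothetical CSV export; with a real file you would call pd.read_csv("orders.csv")
csv_text = """order_id,customer_id,amount,order_date
1,C01,120.50,2024-01-05
2,C02,80.00,2024-01-06
3,C01,,2024-01-07
"""
orders = pd.read_csv(io.StringIO(csv_text), parse_dates=["order_date"])

# Hypothetical API response, already decoded from JSON into Python objects
api_payload = [
    {"customer_id": "C01", "profile": {"name": "Ana", "country": "PT"}},
    {"customer_id": "C02", "profile": {"name": "Ben", "country": "US"}},
]
# Flatten nested fields into columns such as "profile.country"
customers = pd.json_normalize(api_payload)

print(orders.dtypes)
print(customers.columns.tolist())
```

Checking `dtypes` right after loading is a cheap way to catch format compatibility problems, such as a numeric column that was read as text.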

Step 2: Data Assessment (Exploratory Phase)

Data assessment examines the patterns, quality, and structure of a dataset. This exploratory pass identifies nulls, anomalies, and inconsistencies before systematic cleaning starts.

- Shape, structure, summary statistics
- Checking nulls
- Checking unique values
- Identifying anomalies
- Understanding distributions
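The checks listed above map closely onto a handful of pandas calls. The small DataFrame below is hypothetical and seeded with typical problems: a missing age, an implausible age of 230, and "Lisbon" and "lisbon" recorded as separate categories.

```python
import pandas as pd

# Small hypothetical dataset standing in for freshly loaded raw data
df = pd.DataFrame({
    "age": [34, 29, None, 41, 230],          # 230 looks like an entry error
    "city": ["Lisbon", "lisbon", "Porto", None, "Porto"],
    "income": [52000, 48000, 61000, 58000, 55000],
})

print(df.shape)                    # (rows, columns)
df.info()                          # column types and non-null counts
print(df.describe())               # summary statistics; the max age of 230 stands out
print(df.isna().sum())             # nulls per column
print(df.nunique())                # distinct values; exposes "Lisbon" vs "lisbon"
print(df["city"].value_counts(dropna=False))  # category distribution, including missing
```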

Step 3: Data Cleaning

Data cleaning addresses errors and inconsistencies. Missing values, duplicates, incorrect data types, and inconsistent categorical labels must all be managed properly to enable effective data wrangling in data science.

- Handling missing values
- Removing duplicates
- Fixing data types
- Standardizing text formatting
- Correcting categorical inconsistencies
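Here is a minimal pandas sketch of those cleaning tasks applied to a hypothetical customer table. Median imputation is only one option for missing values, chosen here for illustration. The right strategy depends on why the values are missing.

```python
import pandas as pd

raw = pd.DataFrame({
    "customer_id": ["C01", "C02", "C02", "C03", "C04"],
    "signup_date": ["2024-01-05", "2024-02-10", "2024-02-10", "not available", "2024-03-01"],
    "plan": [" Basic", "premium", "premium", "PREMIUM ", "basic"],
    "monthly_spend": ["20", "35", "35", None, "22"],
})

clean = raw.copy()  # work on a copy so the raw data stays untouched

# Remove exact duplicate rows
clean = clean.drop_duplicates()

# Fix data types; invalid entries become NaT/NaN instead of raising an error
clean["signup_date"] = pd.to_datetime(clean["signup_date"], errors="coerce")
clean["monthly_spend"] = pd.to_numeric(clean["monthly_spend"], errors="coerce")

# Standardize text formatting, which also collapses inconsistent category labels
clean["plan"] = clean["plan"].str.strip().str.lower()

# Handle missing values: impute spend with the median (one option among several)
clean["monthly_spend"] = clean["monthly_spend"].fillna(clean["monthly_spend"].median())

print(clean)
```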

Step 4: Data Transformation

Data transformation reshapes clean data into formats suited to analysis and modeling. Techniques such as scaling, encoding, and binning improve analytical readiness and, for many algorithms, model performance.

- Normalization vs Standardization
- Encoding categorical variables
- Feature scaling
- Log transformation
- Date-time transformations
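The sketch below shows each transformation on hypothetical columns. Min-max normalization computes (x − min) / (max − min) and maps values into [0, 1]. Standardization computes (x − mean) / std and gives mean 0 and standard deviation 1; the population standard deviation (`ddof=0`) is used here. In a modeling workflow you would typically learn these statistics from the training data only. Libraries such as scikit-learn offer `MinMaxScaler`, `StandardScaler`, and `OneHotEncoder` for that purpose.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "income": [30000.0, 45000.0, 60000.0, 250000.0],
    "channel": ["web", "store", "web", "app"],
    "order_ts": pd.to_datetime(["2024-01-05 09:30", "2024-01-06 14:00",
                                "2024-02-10 20:15", "2024-03-01 08:45"]),
})

inc = df["income"]

# Normalization (min-max): rescales values into the [0, 1] range
df["income_minmax"] = (inc - inc.min()) / (inc.max() - inc.min())

# Standardization (z-score): mean 0, standard deviation 1
df["income_z"] = (inc - inc.mean()) / inc.std(ddof=0)

# Log transformation: compresses a right-skewed variable; log1p also handles zeros
df["income_log"] = np.log1p(inc)

# Encoding: one indicator column per category
df = pd.get_dummies(df, columns=["channel"], prefix="channel")

# Date-time transformations: extract parts that models can use
df["order_month"] = df["order_ts"].dt.month
df["order_dayofweek"] = df["order_ts"].dt.dayofweek
df["order_hour"] = df["order_ts"].dt.hour
```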

Step 5: Data Integration

Data integration combines multiple datasets into a single view. Doing it well means aligning schemas, keeping keys consistent, and checking that joins neither duplicate rows nor lose data.

- Merging datasets
- Joining tables
- Concatenation
- Handling key mismatches
- Schema alignment
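A minimal pandas sketch of integration, assuming two hypothetical tables whose key columns differ in name and letter case. The `validate` argument of `DataFrame.merge` raises an error if the key relationship is not what you expect. The `indicator` argument adds a `_merge` column showing whether each row found a match.

```python
import pandas as pd

orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4],
    "cust_id": ["c01", "C02", "C02", "C09"],   # inconsistent case; C09 is unknown
    "amount": [120.5, 80.0, 45.0, 60.0],
})
customers = pd.DataFrame({
    "customer_id": ["C01", "C02", "C03"],
    "region": ["North", "South", "East"],
})

# Schema alignment: same column name and same key format on both sides
orders = orders.rename(columns={"cust_id": "customer_id"})
orders["customer_id"] = orders["customer_id"].str.upper()

# Join tables; many_to_one fails loudly if a customer appears twice
merged = orders.merge(customers, on="customer_id", how="left",
                      validate="many_to_one", indicator=True)

# Handle key mismatches: inspect orders that found no customer
unmatched = merged[merged["_merge"] == "left_only"]
print(unmatched[["order_id", "customer_id"]])

# Concatenation: stack datasets that share the same columns
q1 = pd.DataFrame({"order_id": [1, 2], "amount": [10.0, 20.0]})
q2 = pd.DataFrame({"order_id": [3], "amount": [30.0]})
all_quarters = pd.concat([q1, q2], ignore_index=True)
```

A left join that keeps the row count unchanged, as here, is a quick sign that no duplication occurred.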

Step 6: Data Reduction

Data reduction makes datasets smaller and clearer by removing redundant or irrelevant features and by applying dimensionality reduction methods such as Principal Component Analysis (PCA).

- Removing irrelevant features
- Feature selection
- Dimensionality reduction
- Reducing multicollinearity
- Improving efficiency
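The sketch below strings together three reduction steps on synthetic data: dropping an identifier and a zero-variance column, removing one column from a highly correlated pair, and running PCA. The 0.95 correlation threshold is an arbitrary assumption. PCA is computed directly via NumPy's SVD of the standardized data to keep dependencies minimal. scikit-learn's `PCA` class is a common alternative.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
df = pd.DataFrame({
    "x1": x1,
    "x1_copy": x1 * 2 + rng.normal(scale=0.01, size=n),  # nearly collinear with x1
    "x2": rng.normal(size=n),
    "constant": np.ones(n),                              # carries no information
    "row_id": np.arange(n),                              # identifier, irrelevant for modeling
})

# Remove irrelevant features: identifiers and zero-variance columns
reduced = df.drop(columns=["row_id"])
reduced = reduced.loc[:, reduced.std() > 0]

# Reduce multicollinearity: drop one column from each highly correlated pair
corr = reduced.corr().abs()
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
to_drop = [col for col in upper.columns if (upper[col] > 0.95).any()]
reduced = reduced.drop(columns=to_drop)

# Dimensionality reduction: PCA via SVD of the standardized data
X = ((reduced - reduced.mean()) / reduced.std()).to_numpy()
U, S, Vt = np.linalg.svd(X, full_matrices=False)
explained = S**2 / np.sum(S**2)   # share of variance captured by each component
components = X @ Vt[:1].T         # project onto the first principal component
```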

Tools & Libraries for Data Wrangling

Data wrangling tools and libraries help data scientists and analysts clean, convert, and structure datasets efficiently, which increases productivity and streamlines workflows.

1. Python Ecosystem

- Pandas
- NumPy
- Dask
- Polars
- Vaex

2. R Ecosystem

- dplyr
- tidyr
- data.table
- readr
- stringr

3. Big Data Tools

- Apache Spark
- Hadoop
- Apache Flink
- Apache Hive
- Apache Kafka

4. No-Code / Low-Code Tools

- Microsoft Power Query
- OpenRefine
- Alteryx Designer
- Talend Data Preparation
- Trifacta Wrangler

Best Practices for Effective Data Wrangling in Data Science

To work efficiently and professionally, follow these six best practices:

1. Keep Raw Data Untouched

Always keep the original dataset safe and unaltered, and do your cleaning on a separate copy. If something goes wrong, you can return to the raw form and start again.

Protecting your raw data makes the process more trustworthy. It also makes your work more transparent and easier to review, because you can compare results before and after each change.

2. Make Work Reproducible

Avoid manual spreadsheet edits that leave no record of what changed. Use scripts and record your steps so the same procedure can be repeated at any time.

Reproducible work reduces errors and saves time. It also makes teamwork easier, because others can understand and rerun the same procedure.

3. Validate After Each Step

Always double-check the dataset after filtering, combining, or altering it. Verify that nothing unexpected has changed by going over row counts, column names, and basic summaries.

Small validation checks prevent major mistakes later. Before you move on to analysis or modeling, this habit helps ensure that your dataset remains correct and dependable.
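One way to make these checks routine is a small helper that runs after each step. `check_step` is a hypothetical name. It compares row counts, column names, and duplicates between the data before and after a step such as a merge.

```python
import pandas as pd


def check_step(before: pd.DataFrame, after: pd.DataFrame, expected_columns: list,
               allow_row_change: bool = False) -> None:
    """Hypothetical helper: fail loudly if a wrangling step changed the data unexpectedly."""
    if not allow_row_change:
        assert len(after) == len(before), f"row count changed: {len(before)} -> {len(after)}"
    assert list(after.columns) == expected_columns, f"unexpected columns: {list(after.columns)}"
    assert not after.duplicated().any(), "duplicate rows found"


orders = pd.DataFrame({"order_id": [1, 2, 3], "customer_id": ["C01", "C02", "C01"]})
customers = pd.DataFrame({"customer_id": ["C01", "C02"], "region": ["North", "South"]})

merged = orders.merge(customers, on="customer_id", how="left")
check_step(orders, merged, ["order_id", "customer_id", "region"])
print(merged.describe(include="all"))   # quick summary for a visual check
```

Plain `assert` statements are skipped when Python runs with the `-O` flag, so a production pipeline would usually raise explicit exceptions instead.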

4. Document Transformations

Write concise notes describing each change you made and why. Brief comments in your script help others understand your reasoning and choices.

Good documentation makes data preparation easier to maintain and modify. It also saves time when you return to a task after weeks or months.

5. Automate Repetitive Tasks

If you perform the same cleaning step frequently, turn it into a function or reusable script. Automation reduces manual work and keeps projects consistent.

This approach preserves quality while improving efficiency. Clean, automated workflows also produce reliable input for building accurate data science models.

6. Understand the Data Context

Before cleaning, learn what each column means and how it was collected. Knowing this background helps you avoid accidentally discarding crucial details.

Understanding the context leads to better cleaning decisions. It also helps ensure that your data accurately reflects business requirements and real-world scenarios.

Common Data Wrangling Mistakes Beginners Should Avoid

1. Blindly Dropping Missing Values

Eliminating missing values without considering the consequences can lead to bias, the loss of crucial information, and distorted analytical results. This is especially true when the missingness follows a pattern, for example when values are missing more often for one customer group than another.
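The hypothetical example below shows how a blind `dropna` can skew a dataset when the missingness depends on another column. It then sketches one alternative: keep every row, flag the gap, and impute within each group. Group-median imputation is an illustrative choice, not the only valid one.

```python
import pandas as pd

df = pd.DataFrame({
    "segment": ["new", "new", "new", "loyal", "loyal", "loyal"],
    "income": [None, None, 40000.0, 70000.0, 72000.0, 68000.0],
})

# Check whether missingness depends on another column before dropping anything
missing_rate = df["income"].isna().groupby(df["segment"]).mean()
print(missing_rate)   # new customers are missing income far more often

# Blind drop: most "new" customers disappear, so "loyal" ones are over-represented
dropped = df.dropna(subset=["income"])
print(dropped["segment"].value_counts(normalize=True))

# Alternative: keep every row, flag the gap, and impute within each segment
df["income_missing"] = df["income"].isna()
df["income_filled"] = df["income"].fillna(df.groupby("segment")["income"].transform("median"))
```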

2. Ignoring Data Leakage

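Data leakage occurs when information from the test dataset is used during cleaning or preparation, for example by computing scaling statistics or imputation values on the full dataset. Evaluation results then look better than they should, and the model may perform poorly once deployed.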
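A minimal sketch of the leak-free pattern on synthetic data: split first, learn the preprocessing parameters from the training rows only, then apply those same parameters to both splits. The 80/20 shuffled split is an assumption for illustration. scikit-learn's `Pipeline` enforces the same discipline when fitted on training data.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
df = pd.DataFrame({"feature": rng.normal(50, 10, size=100)})

# Split first (simple shuffled 80/20 split)
shuffled = df.sample(frac=1.0, random_state=42)
train, test = shuffled.iloc[:80].copy(), shuffled.iloc[80:].copy()

# Leaky version (avoid): statistics computed on all rows, including the test set
# leaky_mean = df["feature"].mean()

# Leak-free version: learn the statistics from the training set only...
mu, sigma = train["feature"].mean(), train["feature"].std()
# ...then apply the same parameters to both splits
train["feature_z"] = (train["feature"] - mu) / sigma
test["feature_z"] = (test["feature"] - mu) / sigma
```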

3. Not Checking Assumptions

Neglecting to examine data distributions, value ranges, and logical constraints can lead to improper transformations, false conclusions, and untrustworthy insights.
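The sketch below runs the three kinds of assumption checks on a hypothetical table: a range check, a logical constraint between two date columns, and a distribution check. The 0 to 120 age window is an assumed plausibility bound, not a universal rule.

```python
import pandas as pd

df = pd.DataFrame({
    "age": [25, 37, -3, 52, 140],
    "start_date": pd.to_datetime(["2024-01-01", "2024-02-01", "2024-03-01",
                                  "2024-04-01", "2024-05-01"]),
    "end_date": pd.to_datetime(["2024-01-10", "2024-01-15", "2024-03-05",
                                "2024-04-20", "2024-05-02"]),
    "revenue": [100.0, 120.0, 95.0, 110.0, 5000.0],
})

# Range check: ages outside a plausible window (assumed bounds, inclusive)
bad_age = df[~df["age"].between(0, 120)]

# Logical constraint: an end date should not come before its start date
bad_dates = df[df["end_date"] < df["start_date"]]

# Distribution check: strong positive skew suggests a log transform or an outlier review
print(df["revenue"].skew())
```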

4. Over-Normalizing Data

Overusing scaling or normalization, or applying it where it is not needed, can distort meaningful variation, make results harder to interpret, and sometimes reduce rather than improve model performance. Tree-based models, for example, generally do not require scaled inputs.

5. Hardcoding Transformations

Writing fixed values directly into code reduces flexibility, makes updates difficult, and causes errors when datasets change or new categories appear. Derive such values from the data, or keep them in configuration, so the code adapts as the data changes.
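A minimal sketch of the alternative to hardcoding. Settings live in one hypothetical `CONFIG` dictionary, which in practice might be loaded from a JSON or YAML file. The outlier cap is computed from the current data, and one-hot columns come from whatever categories are present, so a new plan value gets its own column without code changes.

```python
import pandas as pd

# Settings kept in one place instead of scattered through the logic
CONFIG = {"cap_quantile": 0.99, "category_column": "plan"}


def prepare(df: pd.DataFrame, config: dict) -> pd.DataFrame:
    out = df.copy()
    # Cap derived from the current data instead of a fixed number such as 10000
    cap = out["amount"].quantile(config["cap_quantile"])
    out["amount"] = out["amount"].clip(upper=cap)
    # Indicator columns are built from the categories actually present
    return pd.get_dummies(out, columns=[config["category_column"]])


batch = pd.DataFrame({
    "amount": [20.0, 35.0, 22.0, 9000.0],
    "plan": ["basic", "premium", "basic", "enterprise"],
})
prepared = prepare(batch, CONFIG)
```

For model inputs, you would instead store the training-time columns and align each new batch to them, for example with `prepared.reindex(columns=train_columns, fill_value=0)`, where `train_columns` is whatever list you saved at training time.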

6. Skipping Data Validation

Failing to validate results after cleaning or combining datasets can lead to inaccurate analysis and poor decisions.

Real-World Applications of Data Wrangling

Transforming unstructured data into useful insights is essential for decision-making, predictive modeling, and effective operations in a variety of sectors and analytics initiatives.

1. Healthcare Analytics

Cleans patient records and integrates data from various sources, producing datasets for accurately forecasting illness trends and improving treatment outcomes.

2. Financial Services

Prepares transactional and market data for risk assessment, fraud detection, and forecasting investment opportunities.

3. Marketing & Sales

Transforms sales and consumer behavior data to improve campaigns, refine market targeting, and boost conversion rates.

4. E-commerce & Retail

Cleans transaction, inventory, and customer feedback data to improve operational efficiency, stock management, and recommendation systems.

5. IoT & Sensor Data

Processes large volumes of streaming device data in real time to support smart automation, predictive maintenance, and monitoring.

6. Government & Public Sector

Efficiently prepares administrative, demographic, and census data for resource allocation, urban planning, and policymaking.

Advanced Concepts in Data Wrangling

Complex datasets call for advanced data wrangling techniques that keep them accurate, efficient to process, and ready for machine learning or advanced analytics applications.

1. Handling Imbalanced Datasets

To balance class distributions and improve model performance for predictive analytics, use strategies such as oversampling, undersampling, or synthetic data generation (for example, SMOTE).
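A minimal sketch of random oversampling and random undersampling with pandas on a hypothetical fraud dataset. Resampling is normally applied to the training split only, after the train/test split, so that duplicated minority rows do not leak into evaluation. Synthetic methods such as SMOTE are available in the separate imbalanced-learn package.

```python
import pandas as pd

df = pd.DataFrame({
    "amount": [10, 12, 11, 13, 9, 14, 10, 500, 620],
    "is_fraud": [0, 0, 0, 0, 0, 0, 0, 1, 1],
})

majority = df[df["is_fraud"] == 0]
minority = df[df["is_fraud"] == 1]

# Random oversampling: draw minority rows with replacement up to the majority size
minority_up = minority.sample(n=len(majority), replace=True, random_state=0)
oversampled = pd.concat([majority, minority_up]).sample(frac=1.0, random_state=0)

# Random undersampling: keep only as many majority rows as there are minority rows
majority_down = majority.sample(n=len(minority), random_state=0)
undersampled = pd.concat([majority_down, minority])
```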

2. Time-Series Data Processing

For precise forecasting, time-series data must be cleaned, resampled, and transformed to account for seasonality, irregular intervals, and missing timestamps.
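The sketch below handles irregular intervals and missing timestamps with pandas. It resamples hypothetical sensor readings onto a regular hourly grid, then fills the empty hours by time-based interpolation. Seasonality handling, such as decomposition or seasonal differencing, is not shown.

```python
import pandas as pd

# Irregular sensor readings with no data during the 03:00 and 04:00 hours
readings = pd.DataFrame({
    "ts": pd.to_datetime(["2024-01-01 00:05", "2024-01-01 00:40", "2024-01-01 01:10",
                          "2024-01-01 02:20", "2024-01-01 05:15"]),
    "temp": [20.1, 20.5, 21.0, 21.4, 22.6],
}).set_index("ts")

# Resample onto a regular hourly grid (mean of the readings within each hour)
hourly = readings["temp"].resample("h").mean()

# Hours with no readings appear as NaN; fill them by time-weighted interpolation
hourly_filled = hourly.interpolate(method="time")
```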

3. Text Data Wrangling

To prepare text for natural language processing tasks, preprocess it with tokenization, stop word removal, stemming, and vectorization.
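Here are the four steps in plain Python on two hypothetical reviews. The stop word list is a tiny illustrative set. `crude_stem` is a hypothetical naive suffix stripper standing in for a real stemmer such as NLTK's `PorterStemmer`. Vectorization here is simple bag-of-words counting, the idea behind scikit-learn's `CountVectorizer`.

```python
import re
from collections import Counter

docs = ["The delivery was late and the package arrived damaged",
        "Fast delivery, great packaging, arrived early"]

# Tiny illustrative stop word list (real projects use a fuller list)
STOP_WORDS = {"the", "was", "and", "a", "an"}


def tokenize(text: str) -> list:
    # Lower-case and keep alphabetic word tokens only
    return re.findall(r"[a-z]+", text.lower())


def crude_stem(token: str) -> str:
    # Naive suffix stripping; a stand-in for a real stemming algorithm
    for suffix in ("ing", "ed", "es", "s", "e"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


processed = [[crude_stem(t) for t in tokenize(d) if t not in STOP_WORDS] for d in docs]

# Vectorization: bag-of-words counts over a shared, sorted vocabulary
vocab = sorted({t for doc in processed for t in doc})
vectors = [[counts[term] for term in vocab] for counts in map(Counter, processed)]
```

Stemming maps "package" and "packaging" to the same token, so the two reviews share that dimension in the vectors.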

4. Outlier Detection and Treatment

To avoid biased machine learning models or skewed analysis, identify and manage outliers using statistical techniques or subject-matter expertise.
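A minimal sketch of one common statistical technique, the interquartile range (IQR) rule, on hypothetical response times. Values more than 1.5 × IQR below the first quartile or above the third quartile are flagged. The two treatment options shown, flagging for review and capping (winsorizing) at the bounds, are illustrative. Subject-matter knowledge should decide whether an extreme value is an error or a real event.

```python
import pandas as pd

s = pd.Series([52.0, 48.0, 50.0, 47.0, 53.0, 49.0, 51.0, 400.0], name="response_ms")

# IQR rule: flag values beyond 1.5 * IQR from the quartiles
q1, q3 = s.quantile(0.25), s.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (s < lower) | (s > upper)

# Treatment options: review the flagged rows, or cap (winsorize) at the bounds
print(s[is_outlier])
capped = s.clip(lower=lower, upper=upper)
```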

5. Pipeline Automation

To effectively handle recurring cleaning and transformation chores across several datasets or projects, create reusable, automated data pipelines.

6. Data Validation Frameworks

Prior to analysis or modeling, make sure datasets fulfil accuracy, consistency, and completeness standards by putting validation procedures and quality checks into place.
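A minimal sketch of a rule-based validation layer. Each rule in a hypothetical `RULES` dictionary returns a mask of valid rows, and `validate` reports how many records fail each rule. The allowed country codes are an assumption for illustration. Libraries such as pandera and Great Expectations provide fuller versions of this idea.

```python
import pandas as pd

# Declarative rules: each maps a name to a function returning a boolean mask of valid rows
RULES = {
    "customer_id is present": lambda df: df["customer_id"].notna(),
    "customer_id is unique": lambda df: ~df["customer_id"].duplicated(keep=False),
    "amount is non-negative": lambda df: df["amount"] >= 0,
    "country is a known code": lambda df: df["country"].isin({"US", "PT", "DE"}),
}


def validate(df: pd.DataFrame, rules: dict) -> pd.DataFrame:
    """Return one row per rule with the number of failing records."""
    report = [{"rule": name, "failures": int((~check(df)).sum())}
              for name, check in rules.items()]
    return pd.DataFrame(report)


data = pd.DataFrame({
    "customer_id": ["C01", "C02", "C02", None],
    "amount": [10.0, -5.0, 20.0, 15.0],
    "country": ["US", "PT", "XX", "DE"],
})
report = validate(data, RULES)
print(report)
```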

The Future of Data Wrangling

Automation, AI integration, and more sophisticated frameworks are shaping the future of data wrangling. They will change how efficiently analysts and data scientists approach it.

1. Automation & AI-Assisted Cleaning

Reduce manual intervention in data wrangling and increase productivity by using AI technologies to automatically discover mistakes, clean data, and transform datasets.

2. Data-Centric AI

Concentrate on enhancing the structure, labeling, and quality of the data to produce more dependable machine learning models and superior analytical insights.

3. Data Quality Engineering Roles

Specialized roles that bridge data engineering and analytics teams are emerging to ensure data accuracy, completeness, and reliability across pipelines.

4. Growth of ELT vs ETL Pipelines

In ELT (Extract, Load, Transform) pipelines, raw data is loaded into the warehouse first and transformed there. This shift lets modern data warehouses handle massive volumes of data and support contemporary analytics and machine learning.

5. Integration of Streaming Data

Complex pipelines are needed to handle data in real time from social media, sensors, and the Internet of Things so that analysis and decisions can happen quickly.

6. Advanced Data Governance

To ensure that datasets are reliable, safe, and consistent across organizational systems, put policies, standards, and monitoring tools into place.

Great analysts are distinguished by their ability to turn unstructured data into meaningful insights. Data wrangling in data science enables teams to clean, organize, and prepare data effectively, speeding up analysis and modeling. Anyone can work confidently with complex datasets by following best practices, using the appropriate tools, and avoiding common mistakes. Real-world examples and applications show how much careful data handling can matter across industries. Automation, advanced techniques, and validation help keep your data accurate. In the end, effective data wrangling leads to better decisions and better day-to-day results. With consistent effort, data wrangling can become an essential and natural part of your workflow.