Learn the Fundamentals of Deep Learning

A limited-time opportunity to learn the fundamentals of deep learning. Gain essential skills now, before the chance slips away.

How does your phone unlock by recognizing your face, and how do streaming platforms recommend shows that seem tailored to your preferences? Deep learning, a ground-breaking technology that has transformed industries and daily life, is behind these everyday surprises. I first became interested in deep learning while working on a project that involved analyzing medical images. Identifying minute irregularities in MRI scans was difficult with conventional techniques. When I used deep learning, the results were astounding: it produced highly accurate predictions and found patterns that human experts had been unable to see.

By 2025, the global artificial intelligence (AI) market is expected to reach a value of around $243.7 billion, with deep learning a key driver of this expansion.

A subset of AI, deep learning is predicted to grow at a compound annual growth rate (CAGR) of more than 33.5% between 2023 and 2030, driven by improvements in data centre capabilities, greater processing power, and its capacity to carry out tasks without human input.

This rapid growth shows that deep learning matters well beyond its status as a buzzword.

So why is deep learning so powerful, and why is it worth your time to learn it? This article covers the principles of deep learning, explaining its core ideas, uses, tools, and challenges while offering a path to becoming proficient with this game-changing technology.

What is Deep Learning?

Deep learning is a branch of machine learning that loosely mimics how people learn from experience. It processes enormous volumes of data, identifies patterns, and makes decisions using artificial neural networks that are modelled after the structure and function of the human brain. These networks are made up of layers of connected nodes, or "neurons," which gradually transform raw data into meaningful information.

A Simple Example

Consider teaching a child the difference between dogs and cats. You would show them images and describe the differences, highlighting characteristics such as tail length, ear shape, or fur type. Neural networks in deep learning perform a similar task, but they learn these features automatically from the input without explicit instructions.

How It Works

A deep learning model is built from several layers of neurons:

- Input Layer: Accepts raw data, such as an image or a text string.

- Hidden Layers: Use weighted connections to process the data and identify features and patterns.

- Output Layer: Produces the prediction, such as whether an image shows a "cat" or a "dog."

This layered approach is what lets deep learning solve complex problems such as speech synthesis, language translation, and image recognition.
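To make the layer-by-layer flow concrete, here is a minimal sketch in plain Python. The input values, weights, and biases are hypothetical numbers picked by hand for illustration; a real network would learn them from data, and the helper name `dense` is an assumption of this example.

```python
def dense(inputs, weights, biases):
    """Fully connected layer: each neuron outputs a weighted sum of its inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

# Hypothetical, hand-picked numbers for illustration only (not trained values).
features = [0.8, 0.2, 0.5]           # input layer: three made-up measurements
hidden_weights = [[0.5, -0.3, 0.8],  # one row of weights per hidden neuron
                  [-0.6, 0.9, 0.1]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.2, -0.7],       # one row of weights per output class
                  [-1.0, 0.9]]
output_biases = [0.0, 0.0]

# Hidden layer: weighted sums followed by a simple non-linearity (negative values become 0).
hidden = [max(0.0, v) for v in dense(features, hidden_weights, hidden_biases)]

# Output layer: one score per class; the highest score is the prediction.
scores = dense(hidden, output_weights, output_biases)
labels = ["cat", "dog"]
prediction = labels[scores.index(max(scores))]
print(prediction)
```

With these particular made-up weights the "cat" score comes out higher. Changing the weights changes the answer, which is exactly what training does in a systematic way.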

Why Learn the Fundamentals of Deep Learning?

It is important to understand the fundamentals of deep learning for several reasons:

Flexible Applications:

- Healthcare: Predicting patient outcomes and identifying illnesses from medical imagery.

- Finance: Recognizing fraudulent activity and refining investment plans.

- Retail: Providing the backbone for recommendation engines that enable customized purchasing experiences.

Great Career Opportunities: Deep learning experts are in high demand, and positions such as machine learning engineer, data scientist, and AI engineer pay well.

Creativity and Problem Solving: Deep learning makes it possible to tackle problems once thought to be intractable. For example, it helps farmers make data-driven decisions by using drone imagery to evaluate crop health.

Impact Across Industries: Deep learning is transforming how companies and organizations function, from climate modelling to self-driving cars.

Understanding these principles opens the way to building meaningful solutions and shaping the future.

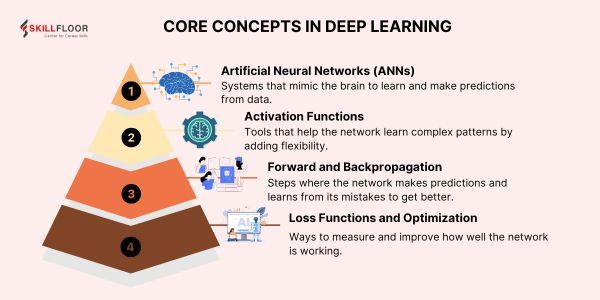

Core Concepts in Deep Learning

To understand how deep learning works, you need to know its essential building blocks:

1. Artificial Neural Networks (ANNs)

Artificial neural networks are the foundation of deep learning. They consist of:

Input Layer: Raw data enters the network here.

Hidden Layers: Intermediate layers of interconnected nodes that process the data.

Output Layer: Generates the final result, such as a numeric estimate or a class label.

Every connection between nodes has a weight that determines its importance. During training, the network adjusts these weights to reduce its errors.

2. Activation Functions

Each neuron's output is determined by its activation function. Common choices include:

ReLU (Rectified Linear Unit): Introduces non-linearity by outputting zero for negative inputs and the input itself for positive values.

Sigmoid: Maps any input to a value between 0 and 1, which makes it well suited to probability estimates such as binary classification.

Softmax: Converts a vector of raw scores into probabilities that sum to 1, for multi-class classification.
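The three functions are short enough to write out by hand. The following is a sketch using only Python's standard library; the sample inputs are arbitrary, and subtracting the largest score inside `softmax` is a common numerical-stability habit rather than part of the definition.

```python
import math

def relu(x):
    # Zero for negative inputs, the input itself otherwise.
    return max(0.0, x)

def sigmoid(x):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-x))

def softmax(scores):
    # Subtracting the largest score avoids overflow and does not change the result.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

relu_out = [relu(x) for x in (-2.0, 0.0, 3.0)]   # negative input is clipped to 0
sigmoid_mid = sigmoid(0.0)                        # an input of 0 maps to the midpoint
class_probs = softmax([2.0, 1.0, 0.1])            # larger scores get larger probabilities
print(relu_out, sigmoid_mid, class_probs)
```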

3. Forward and Backpropagation

Forward Propagation: Data moves through the network layer by layer, and a prediction is computed from the current weights.

Backpropagation: A loss function measures the prediction error, and that error is propagated backwards through the network to compute how each weight should change to improve accuracy.
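The two passes can be traced by hand on a deliberately tiny network. The sketch below assumes one input, one hidden neuron with a tanh activation, one linear output, a squared-error loss, and a single made-up training example; the starting weights and learning rate are arbitrary choices for illustration.

```python
import math

# Tiny network: x -> hidden = tanh(w1 * x) -> y_hat = w2 * hidden
# Hypothetical starting weights and a single made-up training example.
w1, w2 = 0.5, -0.3
x, y_true = 1.0, 0.8
learning_rate = 0.1

def forward(w1, w2, x):
    hidden = math.tanh(w1 * x)
    y_hat = w2 * hidden
    return hidden, y_hat

losses = []
for step in range(200):
    # Forward propagation: compute the prediction with the current weights.
    hidden, y_hat = forward(w1, w2, x)
    losses.append((y_hat - y_true) ** 2)

    # Backpropagation: apply the chain rule from the loss back to each weight.
    d_y_hat = 2.0 * (y_hat - y_true)            # dLoss/dy_hat
    d_w2 = d_y_hat * hidden                     # dLoss/dw2
    d_hidden = d_y_hat * w2                     # dLoss/dhidden
    d_w1 = d_hidden * (1.0 - hidden ** 2) * x   # tanh'(z) = 1 - tanh(z)^2

    # Update: move each weight a small step against its gradient.
    w1 -= learning_rate * d_w1
    w2 -= learning_rate * d_w2

print(losses[0], losses[-1])
```

The loss recorded at the last step is far smaller than at the first, which is the whole point of repeating the forward and backward passes. Frameworks automate the gradient bookkeeping, but the chain rule underneath is the same.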

4. Loss Functions and Optimization

Loss Functions: Measure the gap between a model's predictions and the actual values. Examples include mean squared error (MSE) for regression and cross-entropy loss for classification.

Optimization Algorithms: Methods such as stochastic gradient descent (SGD) and the Adam optimizer minimize the loss function so that the model learns efficiently.
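As a rough illustration, the sketch below writes out both loss functions and runs plain SGD on a one-variable linear model. The data is synthetic, generated from the made-up line y = 2x + 1, and the learning rate and step count are arbitrary. Adam is not shown; it builds on the same gradient step with per-parameter adaptive step sizes.

```python
import math
import random

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def cross_entropy(true_index, probs):
    # Negative log of the probability the model assigned to the correct class.
    return -math.log(probs[true_index])

# Synthetic, noise-free data from the made-up line y = 2x + 1.
data = [(k / 10.0, 2.0 * (k / 10.0) + 1.0) for k in range(-10, 11)]

random.seed(0)
w, b = 0.0, 0.0
learning_rate = 0.1
for step in range(2000):
    x, y = random.choice(data)               # "stochastic": one random example per step
    error = (w * x + b) - y
    w -= learning_rate * 2.0 * error * x     # gradient of squared error w.r.t. w
    b -= learning_rate * 2.0 * error         # gradient of squared error w.r.t. b

final_mse = mean_squared_error([t for _, t in data], [w * s + b for s, _ in data])
confident = cross_entropy(0, [0.9, 0.1])     # correct class given high probability
unsure = cross_entropy(0, [0.5, 0.5])        # correct class given a coin flip
print(w, b, final_mse, confident, unsure)
```

The learned `w` and `b` end up close to 2 and 1, and the cross-entropy is lower when the model puts more probability on the correct class.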

How Deep Learning Differs from Machine Learning

While deep learning is a subset of machine learning, the two differ in key ways, such as how they handle feature engineering, model complexity, and applications. The table below summarizes these differences:

| Aspect | Machine Learning | Deep Learning |
| --- | --- | --- |
| Definition | A type of AI where computers learn from data using simpler models. | A part of machine learning that uses complex models called neural networks. |
| Feature Handling | Humans manually choose what data (features) the model should use. | The model automatically finds useful features from the raw data. |
| Data Type | Works best with structured data like spreadsheets or databases. | Works well with unstructured data like images, text, and audio. |
| Model Complexity | Uses simple models like decision trees or linear regression. | Uses very complex models like deep neural networks. |
| Data Needs | Can work with small datasets if features are well-prepared. | Needs large amounts of data to perform well. |
| Applications | Used for things like predicting sales, detecting fraud, or customer segmentation. | Used for tasks like recognizing faces, translating languages, and detecting objects. |
| Training Time | Trains quickly on normal computers. | Takes longer to train and often needs powerful hardware like GPUs. |
| Performance | Works well for simpler problems with clear patterns. | Excels at complex problems with lots of data. |
| Interpretability | Easier to understand how the model makes decisions. | Harder to understand how the model works ("black box"). |
| Scalability | May not improve much with very large datasets. | Gets better as you give it more data and computing power. |
| Example: House Prices | Requires manually preparing features like number of bedrooms or lot size. | Can learn directly from raw data like photos of houses or full datasets. |

Tools and Frameworks for Deep Learning

To develop, train, and deploy models effectively, deep learning depends on specialized frameworks and tools. These frameworks handle large datasets, streamline training on modern hardware such as GPUs, and simplify complex mathematical computations. The most popular deep learning tools are listed below:

1. TensorFlow

Overview:

- TensorFlow is an open-source deep learning framework created by Google that can be used for both research and production.

- It can handle a wide range of tasks, from training models on large datasets to deploying trained models across several platforms (mobile, web, servers).

Key features:

- Flexibility: Offers high-level APIs like Keras for quick prototyping as well as low-level control for creating custom models.

- Scalability: Designed to run on cloud-based platforms and to support distributed training for large-scale deployments.

- Versatile Ecosystem: Includes tools such as TensorFlow Serving for production use, TensorFlow Lite for mobile deployment, and TensorBoard for visualization.

Use cases include time-series forecasting, image recognition, and natural language processing (NLP), among others.

Benefits include thorough documentation, strong community support, and a design built with production environments in mind.

2. PyTorch

Overview:

- PyTorch is another popular open-source deep learning framework. It was created by Facebook (now Meta) and is especially well liked by researchers because of its ease of use.

- It is known for its dynamic computation graph, which allows the model's structure to be modified at runtime.

Key features:

- Dynamic Computation Graphs: Provides the ability to change models dynamically, which facilitates experimentation and debugging.

- Python Integration: Resembles NumPy in syntax and feels intuitive to Python developers.

- Strong GPU Acceleration: Makes it easy to use GPUs for faster computation.

- TorchScript: Compiles PyTorch code for use on several platforms, easing the move of models from research to production.

Use cases include generative models (like GANs), reinforcement learning, and research and experimentation.

Advantages:

Popular among researchers and academics.

Strong ecosystem featuring libraries such as torchaudio for audio workloads and torchvision for vision applications.

3. Keras

Overview:

- Keras is a high-level deep learning API built on top of TensorFlow. It is designed for building and prototyping neural networks quickly.

- Its user-friendly design makes it accessible to beginners, yet it is still powerful enough for more complex use cases.

Key features:

- Simple and Intuitive API: Beginners will find it easy to use thanks to its clear, modular code structure.

- Seamless TensorFlow Integration: Fully integrated with TensorFlow for improved deployment and performance.

- Pre-trained Models: Offers a collection of models that have already been trained for common tasks such as object detection and image classification.

- Custom Layers: Lets more experienced users define their own layers and functionality.

Use cases include simpler deep learning applications, educational purposes, and quick prototyping.

Applications of Deep Learning

Deep learning's versatility has transformed numerous industries:

1. Healthcare

Medical Imaging: Models analyze MRIs, CT scans, and X-rays to accurately identify conditions like cancer.

Drug Discovery: By predicting protein structures, DeepMind's AlphaFold speeds up the creation of novel therapies.

2. Finance

Fraud Detection: Algorithms protect users and institutions by flagging suspicious transactions in real time.

Algorithmic Trading: Predictive models use historical data to optimize investment decisions.

3. Entertainment

Recommendation Systems: Services such as Spotify and Netflix use user preferences to recommend content.

Content Creation: Generative models create realistic images, videos, and music.

4. Autonomous Vehicles

Self-driving cars that use deep learning can:

- Recognize pedestrians and objects.

- Make decisions in real time for safety and navigation.

Techniques in Deep Learning

1. Convolutional Neural Networks (CNNs)

CNNs are designed to analyze grid-like data such as images. They automatically detect simple patterns like edges and shapes and combine them to recognize complex features such as objects. By using shared weights and pooling layers, CNNs reduce computational demands while preserving important information. They are used extensively in applications such as driverless cars, medical imaging, and image recognition.
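A rough sketch of the two core operations, written in plain Python on a made-up 5x5 image. The kernel values are a hand-picked vertical-edge detector (in a real CNN they are learned), and the function names are assumptions of this example. The same small kernel is reused at every position, which is what "shared weights" refers to.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it is a cross-correlation.
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(k) for j in range(k))
             for c in range(out_w)]
            for r in range(out_h)]

def max_pool2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 block."""
    return [[max(feature_map[r][c], feature_map[r][c + 1],
                 feature_map[r + 1][c], feature_map[r + 1][c + 1])
             for c in range(0, len(feature_map[0]) - 1, 2)]
            for r in range(0, len(feature_map) - 1, 2)]

# Made-up image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked kernel that responds where brightness increases from left to right.
edge_kernel = [[-1, 1],
               [-1, 1]]

feature_map = convolve2d(image, edge_kernel)   # 4x4 map, non-zero only at the edge
pooled = max_pool2x2(feature_map)              # 2x2 summary that still marks the edge
print(feature_map)
print(pooled)
```

The pooled output is a quarter of the size of the feature map but still records where the edge is, which illustrates how pooling cuts computation while keeping the important signal.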

2. Recurrent Neural Networks (RNNs)

RNNs are specialized for sequential data such as time series, text, or audio, and they maintain a memory of prior inputs. Variants like LSTMs and GRUs can capture long-term dependencies and mitigate issues like the vanishing gradient problem. They are used in forecasting, language translation, and speech recognition.
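The "memory" is simply a hidden state that is fed back in at every step. Below is a minimal sketch of a basic (non-LSTM) recurrent cell with a single hidden unit; the weights are hypothetical fixed values chosen for illustration, not trained ones.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    h = 0.0                      # the hidden state starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Two sequences that differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
print(states_a)
print(states_b)
```

In the first run the hidden state is still non-zero at the third step even though the last two inputs are zero, so the network "remembers" the first input. The value also shrinks at each step, a small-scale hint of why plain RNNs struggle with long-range dependencies and why LSTMs and GRUs were introduced.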

3. Transfer Learning

Transfer learning saves time and money by reusing pre-trained models for new, related tasks. The features a pre-trained model has already learned are fine-tuned on a smaller dataset for the specific application. This method lowers the risk of overfitting and is particularly helpful for tasks with little data, such as medical imaging or natural language processing.
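The idea can be sketched without any framework. In the toy example below, `FROZEN_WEIGHTS` is a hypothetical stand-in for the layers of a pre-trained model, and only a small new output "head" is trained on a four-example made-up dataset. This shows the simplest variant, in which the reused layers stay frozen; full fine-tuning would also update them, usually with a small learning rate.

```python
import math

# Stand-in for a pre-trained network: a fixed feature extractor that is never updated.
# (Hypothetical values; in practice these would come from a real pre-trained model.)
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]

def extract_features(x):
    return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Small made-up dataset for the new task: label 1 when the first value is larger.
small_dataset = [([2.0, 0.0], 1), ([1.5, 0.5], 1), ([0.0, 2.0], 0), ([0.5, 1.5], 0)]

# New task-specific head: the only parameters that are trained.
head_w, head_b = [0.0, 0.0], 0.0
frozen_before = [row[:] for row in FROZEN_WEIGHTS]

for epoch in range(200):
    for x, label in small_dataset:
        feats = extract_features(x)
        pred = sigmoid(sum(w * f for w, f in zip(head_w, feats)) + head_b)
        grad = pred - label      # gradient of the log loss w.r.t. the pre-sigmoid score
        head_w = [w - 0.5 * grad * f for w, f in zip(head_w, feats)]
        head_b -= 0.5 * grad

def predict(x):
    feats = extract_features(x)
    score = sum(w * f for w, f in zip(head_w, feats)) + head_b
    return 1 if sigmoid(score) > 0.5 else 0

print([predict(x) for x, _ in small_dataset])
```

Because only three numbers are trained, four examples are enough here, which mirrors why transfer learning suits tasks with little data.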

4. Generative Adversarial Networks (GANs)

In a GAN, a generator and a discriminator compete with each other: the generator creates synthetic data, while the discriminator judges whether the data it sees is real or generated. This competition improves both networks until the generator produces realistic data. Applications for GANs include data augmentation, deepfakes, and image generation.

Challenges in Deep Learning

Deep learning has limitations despite its power:

- Data Requirements: Training frequently requires large datasets.

- Computational Costs: Model training needs substantial resources, such as GPUs or cloud infrastructure.

- Overfitting: Models may perform well on training data but poorly on unseen data, so regularization techniques are necessary.

Designing effective approaches requires an understanding of these challenges.
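As one concrete example of a regularization technique, the sketch below adds an L2 penalty (often called weight decay) to gradient descent on a small linear model. L2 is only one of several options and is chosen here for brevity; the dataset, penalty strength, and learning rate are made-up values for illustration.

```python
def train(data, l2_strength, steps=500, learning_rate=0.05):
    """Gradient descent on mean squared error plus an L2 penalty on the weights."""
    w = [0.0, 0.0]
    n = len(data)
    for _ in range(steps):
        grad = [0.0, 0.0]
        for x, y in data:
            error = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i in range(2):
                grad[i] += 2.0 * error * x[i] / n
        # The penalty l2_strength * sum(w_i^2) adds 2 * l2_strength * w_i to each gradient.
        w = [wi - learning_rate * (g + 2.0 * l2_strength * wi)
             for wi, g in zip(w, grad)]
    return w

def norm(w):
    return sum(wi * wi for wi in w) ** 0.5

# Made-up data: the target follows the first feature; the second is a small distraction.
data = [([1.0, 0.3], 2.1), ([2.0, -0.4], 3.9), ([3.0, 0.1], 6.2), ([4.0, -0.2], 7.8)]

plain = train(data, l2_strength=0.0)
regularized = train(data, l2_strength=1.0)
print(plain, norm(plain))
print(regularized, norm(regularized))
```

The regularized weights come out smaller overall. Keeping weights small limits how closely the model can chase quirks of the training set, which is the intuition behind using such penalties against overfitting.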

Learning Path for Beginners

- Learn Python: With its extensive collection of libraries and tools, Python is the main language for artificial intelligence and deep learning. Start with the basics of syntax and loops, then move on to libraries like pandas and NumPy.

- Learn the Basics of Math: Develop a solid foundation in probability, calculus, and linear algebra, since these topics are essential to neural networks and optimization methods.

- Study Neural Networks: Learn the principles of layers, activation functions, gradient descent, and backpropagation.

- Practice with Projects: Build simple projects, like image or digit classifiers, to put your knowledge to use and hone your problem-solving abilities.

- Join Communities: Participate in hackathons, study groups, or online forums to network, exchange ideas, and learn from seasoned professionals.

Deep learning is a game-changing technology that can solve challenging problems and have a significant impact. By understanding its fundamentals, you can build and thrive in a tech-driven world. Whatever your level of experience, deep learning offers many opportunities to explore, learn, and create solutions that shape the future.

Are you ready to embark on this exciting journey? Start learning now, step by step, layer by layer. We look forward to your contributions.