Top Machine Learning Engineer Interview Questions and Answers

Prepare for your 2025 machine learning engineer interview with this comprehensive guide featuring 75 detailed questions and answers for all levels, from freshers to experienced professionals.

Machine learning (ML) continues to be one of the most dynamic and sought-after domains in the tech industry. As of 2025, the global machine learning market is projected to surpass $205 billion, with companies across industries—from healthcare to finance to e-commerce—hiring skilled ML engineers to build intelligent systems.

According to recent LinkedIn data, ML engineer roles have grown 44% year over year, making it one of the fastest-growing jobs globally. Whether you are a fresher, an experienced engineer, or someone transitioning from software development or data science, preparing for ML engineer interviews is essential to land top positions at companies like Google, Amazon, Meta, Microsoft, and innovative startups.

Basic Machine Learning Interview Questions and Answers

1. What is Machine Learning?

Machine Learning is a subset of Artificial Intelligence that allows systems to automatically learn and improve from experience without being explicitly programmed. It focuses on the development of algorithms that can access data and use it to learn for themselves. ML powers various real-world applications like recommendation engines, image recognition, fraud detection, and natural language processing.

2. What are the types of Machine Learning?

There are mainly three types:

- Supervised Learning: The model learns from labeled data (e.g., spam detection).
- Unsupervised Learning: The model identifies patterns from unlabeled data (e.g., clustering customers).
- Reinforcement Learning: The model learns by interacting with an environment, receiving rewards or penalties (e.g., game AI).

3. How is Machine Learning different from Traditional Programming?

In traditional programming, you input data and rules to get an output. In ML, you input data and output, and the system learns the rules. ML focuses on pattern recognition, scalability, and adaptability, unlike static rule-based systems.

4. What is Overfitting in ML?

Overfitting happens when a model learns not only the underlying pattern in training data but also the noise. This makes the model perform well on training data but poorly on new, unseen data. Techniques to reduce overfitting include:

- Cross-validation (to detect overfitting and guide model selection)
- Pruning (in decision trees)
- Regularization (L1/L2)
- Simplifying the model
- Getting more training data

5. What is Underfitting?

Underfitting occurs when the model is too simple to capture the underlying structure of the data. It performs poorly on both training and testing data. Solutions include:

- Increasing model complexity
- Reducing regularization
- Using a different algorithm

6. Explain the Bias-Variance Trade-off.

- Bias is the error due to overly simplistic assumptions in the model (leading to underfitting).
- Variance is the error due to too much complexity and sensitivity to the training data (leading to overfitting).

The goal is to find a sweet spot where bias and variance are balanced, achieving good performance on both training and unseen data.

7. What is a Confusion Matrix?

A confusion matrix summarizes the performance of a classification model:

- TP (True Positives): Correctly predicted positive values
- TN (True Negatives): Correctly predicted negative values
- FP (False Positives): Negative values incorrectly predicted as positive
- FN (False Negatives): Positive values incorrectly predicted as negative

From this, we derive metrics like accuracy, precision, recall, and F1-score.

8. What are Precision, Recall, and F1-score?

- Precision = TP / (TP + FP): Of the items predicted positive, how many are actually positive?
- Recall = TP / (TP + FN): Of the actual positives, how many did the model find?
- F1 Score = 2 * (Precision * Recall) / (Precision + Recall): The harmonic mean of precision and recall. Useful for imbalanced datasets.
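As a rough illustration, the confusion-matrix counts and the metrics above can be computed in a few lines of plain Python. This is a minimal sketch for a binary problem, assuming labels are encoded as 1 (positive) and 0 (negative); the function names and sample labels are hypothetical, and in practice a library such as scikit-learn would normally be used.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary classification problem."""
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        else:
            if t == positive:
                fn += 1
            else:
                tn += 1
    return tp, tn, fp, fn

def precision_recall_f1(tp, fp, fn):
    # Guard against division by zero when a denominator is empty.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
tp, tn, fp, fn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / len(y_true)
p, r, f1 = precision_recall_f1(tp, fp, fn)
print(tp, tn, fp, fn)       # 3 3 1 1
print(accuracy, p, r, f1)   # 0.75 0.75 0.75 0.75
```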

9. What is Cross-Validation?

Cross-validation is a technique for assessing how well your model will generalize. In K-fold cross-validation, the data is split into K subsets (folds), and the model is trained on K-1 folds while the remaining fold is used for testing. This process is repeated K times so that each fold serves as the test set exactly once, and the K scores are averaged.
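A minimal sketch of K-fold splitting, assuming the data fits in memory as Python lists. `k_fold_indices`, `cross_validate`, and the `mean_baseline` scorer are hypothetical helpers written for illustration; the baseline simply predicts the training mean and is scored by mean squared error.

```python
import random

def k_fold_indices(n_samples, k, shuffle=True, seed=0):
    """Yield (train_idx, test_idx) pairs; every index is tested exactly once."""
    indices = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size

def cross_validate(fit_and_score, X, y, k=5):
    """Train on K-1 folds, score on the held-out fold, average the K scores."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(X), k):
        X_train = [X[i] for i in train_idx]
        y_train = [y[i] for i in train_idx]
        X_test = [X[i] for i in test_idx]
        y_test = [y[i] for i in test_idx]
        scores.append(fit_and_score(X_train, y_train, X_test, y_test))
    return sum(scores) / len(scores)

def mean_baseline(X_train, y_train, X_test, y_test):
    """Hypothetical model: predict the training mean, return test MSE."""
    prediction = sum(y_train) / len(y_train)
    return sum((t - prediction) ** 2 for t in y_test) / len(y_test)

X = [[float(i)] for i in range(20)]
y = [2.0 * i + 1.0 for i in range(20)]
print(cross_validate(mean_baseline, X, y, k=5))
```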

10. What is the Difference Between Classification and Regression?

- Classification predicts categories (e.g., cat or dog).
- Regression predicts continuous values (e.g., house price).

Both are types of supervised learning but serve different purposes.

11. What is Feature Engineering?

Feature engineering is the process of using domain knowledge to extract features from raw data to improve model performance. It includes:

- Binning
- One-hot encoding
- Feature scaling
- Feature extraction (e.g., PCA)

12. Explain the Curse of Dimensionality.

As the number of features grows, the data becomes sparse, and the distance between data points becomes less meaningful. It leads to performance degradation in models, particularly those relying on distance metrics. Dimensionality reduction helps address this.

13. What is Normalization vs. Standardization?

- Normalization (min-max scaling) rescales features to a [0, 1] range.
- Standardization rescales data to have a mean of 0 and a standard deviation of 1.

Both keep features with large numeric ranges from dominating distance-based models like k-NN, and both help gradient-descent-based models converge faster.
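A minimal sketch of both transformations on a single numeric feature, using only the standard library. The income values are made up, and returning zeros for a constant column is an arbitrary choice to avoid dividing by zero. `statistics.pstdev` computes the population standard deviation (ddof=0), which is the convention scikit-learn's StandardScaler also uses.

```python
import statistics

def min_max_normalize(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift to mean 0 and scale to (population) standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

incomes = [20_000, 35_000, 50_000, 80_000]
print(min_max_normalize(incomes))  # [0.0, 0.25, 0.5, 1.0]
print(standardize(incomes))
```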

14. What is a Learning Rate?

Learning rate is a hyperparameter that controls the step size in gradient descent. A high learning rate may overshoot the minimum, while a low rate results in slow convergence. Adaptive optimizers like Adam adjust the effective step size for each parameter during training.
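To see the effect of the learning rate, here is plain gradient descent on the one-dimensional function f(w) = (w - 3)², whose minimum is at w = 3 and whose gradient is 2(w - 3). The function, starting point, step count, and the three learning rates are arbitrary choices for illustration.

```python
def gradient_descent(lr, steps=50, w0=0.0):
    """Minimize f(w) = (w - 3)^2 with a fixed learning rate."""
    w = w0
    for _ in range(steps):
        grad = 2 * (w - 3.0)  # derivative of (w - 3)^2
        w -= lr * grad        # step against the gradient, scaled by lr
    return w

for lr in (0.01, 0.1, 1.1):
    print(lr, gradient_descent(lr))
```

Each update multiplies the distance to the minimum by (1 - 2·lr). With these settings, 0.01 is still well short of 3 after 50 steps (slow convergence), 0.1 ends very close to 3, and 1.1 overshoots further on every step and diverges.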

15. What is a Cost Function?

A cost function quantifies the difference between predicted and actual values. The goal of training is to minimize this cost. Common cost functions include:

- Mean Squared Error (MSE) for regression
- Cross-Entropy Loss for classification
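A minimal sketch of both cost functions in plain Python. The binary cross-entropy version assumes labels are 0/1 and predictions are probabilities of the positive class; clipping with a small `eps` (an arbitrary value here) avoids taking log(0).

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Average negative log-likelihood of 0/1 labels under predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log() is always defined
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

print(mean_squared_error([3.0, 5.0], [2.5, 5.5]))  # 0.25
print(binary_cross_entropy([1, 0], [0.9, 0.1]))     # same as -log(0.9)
```

Confident, correct predictions give a small cross-entropy, while a confident wrong prediction (e.g., p = 0.01 for a true label of 1) is penalized heavily.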

Intermediate Machine Learning Interview Questions and Answers

1. What is feature selection, and why is it important?

Feature selection is the process of selecting the most relevant features from your dataset to train your model. It improves model performance by reducing overfitting, training time, and complexity. Common techniques include Recursive Feature Elimination (RFE), LASSO regularization, and mutual information.

2. Explain the difference between bagging and boosting.

Bagging (Bootstrap Aggregating) trains multiple models on bootstrap samples (random samples of the training data drawn with replacement) and aggregates their predictions, which improves accuracy mainly by reducing variance (e.g., Random Forest). Boosting, on the other hand, builds models sequentially, with each one trying to fix the errors of the previous ones (e.g., XGBoost, AdaBoost), effectively reducing bias.

3. What is dimensionality reduction? Name a few techniques.

Dimensionality reduction is the process of reducing the number of features in a dataset while retaining important information. It improves computation speed and model interpretability. Techniques include Principal Component Analysis (PCA), t-SNE, and Linear Discriminant Analysis (LDA).

4. What is the role of regularization in ML?

Regularization techniques like L1 (Lasso) and L2 (Ridge) add penalties to the loss function to prevent overfitting by discouraging complex models. L1 tends to produce sparse models by shrinking some coefficients exactly to zero, while L2 shrinks all weights toward zero without eliminating them, spreading weight more evenly across correlated features.

5. Describe the working of a Support Vector Machine (SVM).

SVMs are supervised learning models that find the hyperplane separating the classes with the maximum margin. When the data is not linearly separable, they use the kernel trick (e.g., RBF or polynomial kernels) to implicitly map inputs into a higher-dimensional space where a linear separator can be found.

6. How does a decision tree split the data?

A decision tree splits data based on features that result in the highest information gain or lowest Gini impurity. It creates branches until reaching leaf nodes that represent predictions. Pruning is often applied to avoid overfitting.

7. What is the ROC curve and AUC score?

The ROC (Receiver Operating Characteristic) curve plots the True Positive Rate (Recall) against the False Positive Rate as the classification threshold varies. AUC (Area Under the Curve) represents the model's ability to distinguish between classes. AUC = 1 is perfect; 0.5 means no better than random.
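AUC can be computed without drawing the curve: it equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one (ties counted as half). The sketch below uses that pairwise definition, which costs O(P·N) and is meant for illustration only, plus a helper that computes one (TPR, FPR) point of the curve for a given threshold. Helper names and sample scores are hypothetical.

```python
def tpr_fpr(y_true, scores, threshold):
    """One ROC point: rates when predicting positive for score >= threshold."""
    tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= threshold)
    positives = sum(y_true)
    negatives = len(y_true) - positives
    return tp / positives, fp / negatives

def auc_pairwise(y_true, scores):
    """AUC as P(score of random positive > score of random negative)."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))

y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(tpr_fpr(y_true, scores, 0.35))  # (1.0, 0.5)
print(auc_pairwise(y_true, scores))   # 0.75
```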

8. What are hyperparameters, and how do you tune them?

Hyperparameters are configuration settings chosen before training rather than learned from the training data (e.g., learning rate, number of trees, tree depth). Tuning methods include grid search, random search, and Bayesian optimization. Proper tuning helps find the best model configuration.

9. What is the difference between parametric and non-parametric models?

- Parametric models assume a fixed number of parameters (e.g., linear regression).
- Non-parametric models make fewer assumptions and can grow in complexity with data (e.g., k-NN, decision trees).

10. Explain the working of k-Nearest Neighbors (k-NN).

k-NN is a lazy learner that classifies data based on the majority label of the 'k' closest training samples. It’s non-parametric and sensitive to the choice of 'k' and distance metrics (Euclidean, Manhattan).
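A minimal k-NN classifier sketch in plain Python, assuming small in-memory data with numeric features that are already on comparable scales. Nothing is fitted in advance, which is what "lazy" means: all the work happens at prediction time. The toy points and labels are hypothetical, and a tie in the vote goes to whichever label `Counter.most_common` lists first.

```python
import math
from collections import Counter

def manhattan(a, b):
    """Sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def knn_predict(X_train, y_train, x, k=3, metric="euclidean"):
    """Classify x by majority vote among its k nearest training points."""
    distance = math.dist if metric == "euclidean" else manhattan
    neighbours = sorted(zip(X_train, y_train), key=lambda pair: distance(pair[0], x))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

X_train = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 7), (6, 7)]
y_train = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(X_train, y_train, (1.5, 1.5)))                      # a
print(knn_predict(X_train, y_train, (6.5, 6.5), metric="manhattan"))  # b
```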

11. What is the vanishing gradient problem?

This problem occurs in deep neural networks when gradients become too small during backpropagation, causing earlier layers to learn very slowly. It often affects sigmoid or tanh activation functions. Solutions include using ReLU activations and batch normalization.

12. Explain entropy and information gain in decision trees.

Entropy measures the disorder or impurity in a dataset. Information gain calculates the reduction in entropy from a dataset after splitting on an attribute. Trees use these metrics to decide the best feature to split on.
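A minimal sketch of the split criteria, assuming class labels are stored in plain lists and a candidate split is given as a list of child groups. Entropy uses log base 2 (so it is measured in bits), and Gini impurity is included for comparison with Q6. The example labels are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of the class distribution in labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def gini(labels):
    """Gini impurity: chance two random draws (with replacement) differ in class."""
    n = len(labels)
    return 1 - sum((c / n) ** 2 for c in Counter(labels).values())

def information_gain(parent, groups):
    """Parent entropy minus the size-weighted entropy of the child groups."""
    n = len(parent)
    weighted = sum(len(g) / n * entropy(g) for g in groups)
    return entropy(parent) - weighted

labels = ["yes"] * 5 + ["no"] * 5
print(entropy(labels), gini(labels))                             # 1.0 0.5
print(information_gain(labels, [["yes"] * 5, ["no"] * 5]))        # 1.0 (perfect split)
print(information_gain(labels, [["yes", "yes", "no", "no"],
                                ["yes"] * 3 + ["no"] * 3]))       # 0.0 (useless split)
```

A tree would evaluate every candidate split this way and keep the one with the highest gain (or the largest drop in Gini impurity).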

13. How do ensemble models improve predictions?

Ensemble models combine predictions from multiple models to improve accuracy and robustness. Techniques include:

- Bagging: Random Forest
- Boosting: XGBoost, LightGBM
- Stacking: Blending outputs from several base models using a meta-model

14. How does a Naive Bayes classifier work?

It applies Bayes' Theorem, assuming the features are conditionally independent given the class. Despite its simplicity, it performs well on text classification problems. It computes the posterior probability for each class and assigns the class with the highest probability.
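A minimal multinomial Naive Bayes text classifier, sketched under these assumptions: lowercase whitespace tokenization, Laplace (add-alpha) smoothing, and words never seen in training ignored at prediction time. It works with log probabilities so that multiplying many small probabilities does not underflow. The class name and toy emails are hypothetical.

```python
import math
from collections import Counter, defaultdict

class MultinomialNaiveBayes:
    def __init__(self, alpha=1.0):
        self.alpha = alpha  # Laplace smoothing strength

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # class -> word frequencies
        self.vocab = set()
        for doc, label in zip(docs, labels):
            tokens = doc.lower().split()
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, doc):
        tokens = doc.lower().split()
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            score = math.log(count / n_docs)  # log prior P(class)
            denom = sum(self.word_counts[label].values()) + self.alpha * len(self.vocab)
            for tok in tokens:
                if tok in self.vocab:  # independence: add one log-likelihood per word
                    score += math.log((self.word_counts[label][tok] + self.alpha) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

docs = ["win money now", "cheap money offer", "meeting at noon", "project meeting notes"]
labels = ["spam", "spam", "ham", "ham"]
nb = MultinomialNaiveBayes().fit(docs, labels)
print(nb.predict("money offer now"))  # spam
print(nb.predict("meeting notes"))    # ham
```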

15. What is model drift, and how do you handle it?

Model drift occurs when the statistical properties of the input data change over time, causing model performance degradation. Handling it includes regular model retraining, monitoring, and implementing drift detection techniques like population stability index (PSI).
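PSI compares how a feature is distributed across bins in a baseline sample (e.g., training data) versus a recent production sample: PSI = Σ (actual_i - expected_i) · ln(actual_i / expected_i), where each term uses the share of values falling in bin i. The sketch below assumes fixed, hand-picked bin edges and floors empty bins at a small `eps` so the logarithm is defined; that floor can dominate the result when a bin empties out. Commonly cited rules of thumb treat PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be tuned per use case.

```python
import math
from bisect import bisect_right

def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a baseline sample and a current sample over fixed bins."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1  # bin index for value v
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

edges = [25, 50, 75]  # hypothetical bin boundaries
baseline = [float(v) for v in range(100)]
shifted = [v + 30 for v in baseline]
print(population_stability_index(baseline, baseline, edges))  # 0.0
print(population_stability_index(baseline, shifted, edges))
```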

Advanced Machine Learning Interview Questions and Answers

1. What is the difference between generative and discriminative models?

Generative models learn the joint probability distribution P(x, y) and can generate new data points (e.g., Naive Bayes, GANs). Discriminative models learn the conditional probability P(y|x) and focus on classification tasks (e.g., logistic regression, SVM). Generative models are used for tasks like data generation, while discriminative models often achieve better predictive accuracy when enough labeled data is available.

2. How does the Expectation-Maximization (EM) algorithm work?

EM is used to estimate parameters in probabilistic models with hidden variables. It iteratively applies:

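- Expectation (E) step: Using the current parameters, compute the posterior distribution of the hidden variables and, from it, the expected complete-data log-likelihood.
- Maximization (M) step: Update the parameters to maximize this expected log-likelihood.

The two steps repeat until the log-likelihood stops improving. Common applications include Gaussian Mixture Models and Hidden Markov Models.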

3. What are Gaussian Mixture Models (GMM)?

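GMMs model data as a mixture of several Gaussian distributions. Each component is defined by its mean, its covariance (a variance in one dimension), and a mixing weight. The EM algorithm is used for training. GMMs are useful for soft clustering, where each point receives a probability of belonging to each cluster rather than a hard assignment.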
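A minimal EM sketch for a one-dimensional, two-component GMM, assuming well-separated clusters and a crude initialization at the data's minimum and maximum. In the E step, the "expected log-likelihood" reduces to computing responsibilities (the posterior probability of each component for each point); the M step re-estimates weights, means, and variances from them. It is not numerically hardened (a robust version would work in log space), and the synthetic data is made up.

```python
import math
import random

def normal_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(data, n_iter=100):
    means = [min(data), max(data)]  # crude initialization
    variances = [1.0, 1.0]
    weights = [0.5, 0.5]
    for _ in range(n_iter):
        # E step: responsibility of each component for each point.
        resp = []
        for x in data:
            p = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(p)
            resp.append([pi / total for pi in p])
        # M step: weighted re-estimates of each component's parameters.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / len(data)
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            variances[k] = max(
                sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk,
                1e-6,  # floor so a component cannot collapse onto one point
            )
    return weights, means, variances

rng = random.Random(42)
data = [rng.gauss(0, 1) for _ in range(200)] + [rng.gauss(5, 1) for _ in range(200)]
weights, means, variances = em_gmm_1d(data)
print(weights, means, variances)
```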

4. Explain the concept of Markov Chains and their relevance in ML.

A Markov Chain is a stochastic model where the next state depends only on the current state. They are foundational for models like Hidden Markov Models (HMMs), often used in time series prediction, speech recognition, and natural language processing.

5. What is Reinforcement Learning and how is it different from Supervised Learning?

Reinforcement Learning (RL) involves an agent interacting with an environment to maximize cumulative reward. It learns via trial and error, receiving feedback in the form of rewards or penalties. Unlike supervised learning, RL doesn’t require labeled input/output pairs.

6. What is Q-Learning in Reinforcement Learning?

Q-Learning is a model-free RL algorithm that learns the value of taking each action in each state, from which an optimal policy is derived. It updates Q-values using an update rule based on the Bellman equation:

Q(s, a) ← Q(s, a) + α [r + γ max_a' Q(s', a') - Q(s, a)]

Where:

- α = learning rate
- γ = discount factor
- r = reward
- s' = next state; the max is taken over the actions a' available in s'
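A small tabular Q-learning sketch on a hypothetical five-state corridor: the agent starts in state 0, can move left or right, and earns a reward of 1 on reaching state 4, which ends the episode. The environment, hyperparameters, and episode count are illustrative assumptions. The update line is the rule above, with the max term set to 0 at the terminal state, and actions are chosen epsilon-greedily.

```python
import random

N_STATES = 5      # states 0..4; state 4 is terminal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right

def step(state, action):
    """Deterministic corridor dynamics with a reward only at the goal."""
    next_state = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random, or when both actions are tied.
            if rng.random() < epsilon or Q[state][0] == Q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if Q[state][0] > Q[state][1] else 1
            next_state, reward, done = step(state, action)
            target = reward + (0.0 if done else gamma * max(Q[next_state]))
            Q[state][action] += alpha * (target - Q[state][action])
            state = next_state
    return Q

Q = q_learning()
policy = [0 if q[0] > q[1] else 1 for q in Q[:-1]]  # greedy action per state
print(policy)
```

With these settings the learned greedy policy should be to move right in every non-terminal state.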

7. What is the vanishing/exploding gradient problem?

This occurs in deep networks during backpropagation when gradients become too small (vanishing) or too large (exploding), especially in RNNs. Solutions include:

- Using ReLU activations
- Batch normalization
- Gradient clipping
- LSTM/GRU for sequential data

8. What are Attention Mechanisms in Deep Learning?

Attention mechanisms help models focus on specific parts of input sequences. First introduced for RNN-based machine translation and later popularized by Transformer models (e.g., BERT, GPT), attention weights represent the importance of different input tokens, improving performance in NLP tasks.
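The core computation in Transformers is scaled dot-product attention: for each query, the weights are softmax(q · k / √d_k) over all keys, and the output is the weighted sum of the values. This single-head sketch in plain Python omits the learned query/key/value projections, masking, and batching that real implementations include; the toy vectors are made up.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key, and value vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # how much this query attends to each key
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
outputs, weights = scaled_dot_product_attention([[5.0, 0.0]], keys, values)
print(weights, outputs)  # the query aligns with the first key, so it dominates
```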

9. What is the architecture of a Transformer model?

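The original Transformer uses an encoder-decoder architecture built from multi-head self-attention, layer normalization, residual connections, and position-wise feed-forward networks, with positional encodings supplying word-order information. Many later models use only one half (e.g., BERT is encoder-only, GPT is decoder-only). Because they do not rely on recurrence or convolution, Transformers process all positions in parallel and capture long-range dependencies well, although the cost of self-attention grows quadratically with sequence length.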

10. What is transfer learning, and how is it used?

Transfer learning involves using a pre-trained model on one task and fine-tuning it for another related task. It is useful in domains with limited data and accelerates training. Common in image classification (e.g., ResNet) and NLP (e.g., BERT).

11. How do you evaluate the performance of unsupervised models?

Unsupervised models are evaluated using metrics like:

- Silhouette Score
- Davies-Bouldin Index
- Inertia (for K-Means)
- Manual inspection for meaningful clusters

For dimensionality reduction, reconstruction error or explained variance may be used.

12. What are Graph Neural Networks (GNNs)?

GNNs are deep learning models designed to operate on graph data. They generalize neural networks to handle non-Euclidean data structures, allowing node-level, edge-level, or graph-level predictions. Applications include social networks and recommendation systems.

13. What is the role of autoencoders?

Autoencoders are neural networks trained to reconstruct their input. They are used for:

- Dimensionality reduction
- Denoising
- Anomaly detection

Variations include Variational Autoencoders (VAE) for generative tasks.

14. What is Bayesian inference, and how is it applied in ML?

Bayesian inference uses Bayes’ Theorem to update the probability estimate for a hypothesis as more evidence becomes available. It’s used in:

- Naive Bayes classifiers
- Bayesian neural networks
- Probabilistic programming

15. What is the difference between online and batch learning?

- Batch learning: Trains on the entire dataset at once; efficient but memory-intensive.
- Online learning: Trains incrementally with new data; ideal for real-time systems and streaming data.

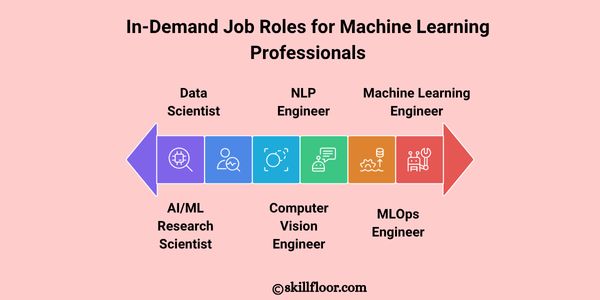

In-Demand Job Roles for Machine Learning Professionals

As the machine learning field grows, understanding the specific roles available helps you focus your career path. These roles span a wide range of responsibilities, from model development and deployment to business strategy and research. Here are some of the top roles companies are hiring for in 2025:

- Machine Learning Engineer – Designs, builds, and deploys ML models at scale.
- Data Scientist – Analyzes large datasets and builds predictive models.
- AI/ML Research Scientist – Works on cutting-edge research problems and model innovation.
- Computer Vision Engineer – Develops ML models for image and video processing.
- Natural Language Processing (NLP) Engineer – Focuses on building systems that understand and generate human language.
- MLOps Engineer – Specializes in deploying, monitoring, and managing ML models in production.

Machine Learning Interview Questions for Freshers

1. What is the difference between AI and ML?

AI (Artificial Intelligence) is the broader concept of machines mimicking human intelligence. ML (Machine Learning) is a subset of AI where machines learn from data to make decisions. AI includes ML, robotics, and expert systems, whereas ML specifically deals with learning from patterns in data.

2. What are the key applications of machine learning?

ML is used in various domains, such as:

- Recommendation systems (Netflix, Amazon)
- Email spam filtering
- Image and speech recognition
- Credit scoring
- Fraud detection
- Chatbots and virtual assistants

3. What are the most commonly used ML algorithms?

Popular algorithms include:

- Linear Regression
- Logistic Regression
- Decision Trees
- k-Nearest Neighbors (k-NN)
- Support Vector Machines (SVM)
- Random Forest
- Naive Bayes

4. What programming languages are best for ML?

Python is the most widely used language due to its simplicity and robust libraries (like Scikit-learn, TensorFlow, and PyTorch). R is also used for statistics-heavy tasks. Other languages include Java, C++, and Julia.

5. What is a training set and a test set?

- Training set: Used to train the model by learning patterns.
- Test set: Used to evaluate how well the model performs on unseen data.

Typically, 70–80% of the data is used for training and 20–30% for testing.

6. What are supervised and unsupervised learning?

- Supervised: The model learns using labeled data. E.g., classifying emails as spam or not.
- Unsupervised: The model identifies patterns in data without labels. E.g., customer segmentation using clustering.

7. What are classification and regression?

Both are supervised learning tasks:

- Classification: Predicts categorical outputs (e.g., yes/no, spam/not spam, or one of several classes).
- Regression: Predicts continuous outputs (prices, temperatures).

8. What is the importance of data preprocessing?

Preprocessing cleans and prepares raw data before feeding it into models. It includes:

- Handling missing values
- Encoding categorical variables
- Scaling/normalizing data
- Removing outliers

These steps help models learn accurately from the data.

9. What is feature scaling, and why is it important?

Feature scaling transforms features to a similar scale, improving model performance, especially for algorithms that rely on distance metrics (e.g., k-NN, SVM). Techniques include Min-Max scaling and standardization.

10. What is the use of a confusion matrix in ML?

A confusion matrix shows model performance by comparing predicted vs. actual values. It helps compute metrics like accuracy, precision, recall, and F1-score. It's especially useful in classification problems.

11. How do you handle missing values?

Strategies include:

- Removing rows/columns with missing data
- Imputing with the mean, median, or mode
- Using algorithms that handle missing data natively (e.g., XGBoost)
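A minimal imputation sketch, assuming missing entries are represented as `None` in a single numeric column. The fill value is computed from observed values only; to avoid leakage it would be computed on the training split and then reused for validation and test data. Function names and the sample ages are hypothetical.

```python
import statistics

def fit_imputer(column, strategy="median"):
    """Compute a fill value from the non-missing entries of a column."""
    observed = [v for v in column if v is not None]
    if strategy == "mean":
        return statistics.fmean(observed)
    if strategy == "median":
        return statistics.median(observed)
    if strategy == "mode":
        return statistics.mode(observed)
    raise ValueError(f"unknown strategy: {strategy}")

def impute(column, fill_value):
    """Replace missing entries (None) with the fitted fill value."""
    return [fill_value if v is None else v for v in column]

ages = [25, None, 40, 31, None]
fill = fit_imputer(ages)   # median of [25, 40, 31] is 31
print(impute(ages, fill))  # [25, 31, 40, 31, 31]
```

The median is often preferred over the mean for skewed columns because a few extreme values do not pull it far.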

12. What is model evaluation, and why is it necessary?

Model evaluation ensures that the model generalizes well to unseen data. Metrics like accuracy, precision, recall, F1-score, and ROC-AUC are used to evaluate classification models; RMSE or MAE for regression models.

13. What are some real-life examples of ML in daily life?

- Google Maps traffic prediction
- Voice assistants (Siri, Alexa)
- Face detection in smartphones
- Social media feed recommendations
- Smart email categorization

14. What is an epoch in machine learning?

An epoch refers to one full pass of the training dataset through the model. In deep learning, models typically train over many epochs to learn patterns effectively.

15. How can I start a career in ML as a fresher?

- Learn Python and ML fundamentals
- Complete online courses and certifications (Coursera, Skillfloor, etc.)
- Work on small projects (e.g., Titanic dataset)
- Practice on Kaggle
- Build a GitHub portfolio
- Apply for internships to gain hands-on experience

Machine Learning Interview Questions for Experienced

1. How do you approach model interpretability in complex ML models?

Model interpretability is critical in high-stakes environments such as healthcare and finance. Experienced professionals often use tools like SHAP (SHapley Additive exPlanations), LIME (Local Interpretable Model-agnostic Explanations), and feature importance metrics to explain model predictions. This enhances trust and facilitates compliance with regulatory frameworks.

2. Describe your experience with deploying machine learning models in production.

Deploying ML models involves:

- Model packaging (e.g., using Pickle, ONNX)
- Creating REST APIs (e.g., Flask, FastAPI)
- Containerization (Docker)
- CI/CD pipelines
- Monitoring (model drift, latency, accuracy)

Tools like MLflow, Airflow, and Kubernetes help in managing deployment pipelines effectively.

3. What is A/B testing, and how is it used in ML?

A/B testing compares two versions of a model or system by randomly splitting live traffic between them and measuring which performs better. A statistical test then checks whether the observed difference is statistically significant rather than noise. In ML, it helps validate model changes before full deployment.
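One common significance check when the metric is a conversion or click-through rate is a two-proportion z-test. The counts below are made-up numbers for two hypothetical model variants, and the test assumes independent users and samples large enough for the normal approximation to hold.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # rate under "no difference"
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, written via erf.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value

# Variant A: 100 conversions out of 1,000 users; variant B: 130 out of 1,000.
z, p_value = two_proportion_z_test(100, 1000, 130, 1000)
print(round(z, 2), round(p_value, 4))
```

Here B's lift would count as significant at the conventional 5% level. In practice the sample size, metric, and significance level should be fixed before the experiment starts.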

4. How do you handle data leakage?

Data leakage occurs when information from outside the training dataset is used to create the model, leading to overly optimistic performance. It’s avoided by:

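- Careful feature engineering (no features that would be unavailable at prediction time)
- Ensuring the separation of training and test data
- Fitting preprocessing steps (e.g., scalers, imputers, encoders) on the training data only, then applying the fitted transformations to the test data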
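A minimal sketch of leakage-safe preprocessing: split first, learn the scaling statistics from the training split only, and reuse them unchanged on the test split. `StandardScalerSketch` is a hypothetical stand-in for a real scaler, and the sequential split is only for illustration (non-temporal data would normally be shuffled before splitting).

```python
import statistics

class StandardScalerSketch:
    """Hypothetical scaler: learns mean/std in fit(), reuses them in transform()."""

    def fit(self, values):
        self.mean = statistics.fmean(values)
        self.std = statistics.pstdev(values) or 1.0  # avoid dividing by zero
        return self

    def transform(self, values):
        return [(v - self.mean) / self.std for v in values]

data = [float(v) for v in range(100)]
train, test = data[:80], data[80:]       # split BEFORE any preprocessing
scaler = StandardScalerSketch().fit(train)  # statistics come from train only
train_scaled = scaler.transform(train)
test_scaled = scaler.transform(test)     # no test information was used to fit
print(scaler.mean)  # 39.5, not the full-data mean of 49.5
```

Fitting the scaler on all 100 values instead would quietly leak the test set's distribution into training.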

5. Describe your approach to hyperparameter tuning at scale.

Experienced ML engineers use tools like GridSearchCV, RandomizedSearchCV, Optuna, or Ray Tune to automate tuning, often across distributed compute environments. Bayesian optimization techniques often find good configurations in fewer trials than grid or random search, which matters most when each training run is expensive.

6. What are the challenges of working with imbalanced datasets?

Imbalanced data can bias models toward the majority class, and plain accuracy can look high even when most minority-class examples are misclassified. Techniques to address this include:

- Resampling (SMOTE, undersampling)
- Using class weights
- Choosing appropriate metrics (AUC-ROC, Precision-Recall curve)
- Ensemble methods like Balanced Random Forests
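Class weights are typically set inversely proportional to class frequency. The sketch below uses n_samples / (n_classes × count_of_class), the same formula scikit-learn applies for `class_weight="balanced"`; each example's loss would then be multiplied by its class weight so that mistakes on the rare class cost more. The 90/10 label split is made up.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * c) for cls, c in counts.items()}

labels = [0] * 90 + [1] * 10
weights = balanced_class_weights(labels)
print(weights)  # the minority class gets weight 5.0, the majority about 0.556
```

With these weights, the total weight of each class is equal (90 × 0.556 ≈ 10 × 5.0), which is exactly the balancing the formula aims for.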

7. How do you approach feature engineering for time-series data?

Time-series feature engineering includes:

- Lag variables
- Rolling averages
- Trend and seasonality decomposition
- Holiday/event encoding

Both feature construction and train/test splits must preserve temporal order to avoid look-ahead bias.
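A minimal sketch of lag and rolling-mean features that respect temporal order, assuming a single evenly spaced series stored in a list. The rolling window ends at t - 1, so the value being predicted never leaks into its own features, and the split is chronological rather than random. The function name, lags, window size, and sample values are hypothetical.

```python
def make_time_series_features(series, lags=(1, 2), window=3):
    """Build rows of lag/rolling features with the value at time t as target."""
    rows = []
    start = max(max(lags), window)  # first t with a full history available
    for t in range(start, len(series)):
        row = {f"lag_{k}": series[t - k] for k in lags}
        # Window covers series[t-window .. t-1]: strictly past values only.
        row[f"rolling_mean_{window}"] = sum(series[t - window:t]) / window
        row["target"] = series[t]
        rows.append(row)
    return rows

series = [10, 12, 13, 15, 14, 16]
rows = make_time_series_features(series)
train_rows, test_rows = rows[:-1], rows[-1:]  # chronological split, no shuffling
print(rows[0])  # lag_1=13, lag_2=12, rolling mean of 10, 12, 13, target 15
```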

8. What strategies do you follow for scaling ML workflows?

Strategies include:

- Distributed training (e.g., Horovod, Dask)
- Model parallelism
- Using cloud services (AWS SageMaker, Google Cloud Vertex AI, formerly AI Platform)
- Efficient data pipelines (e.g., Apache Beam, Spark)

9. What is your experience with MLOps?

MLOps involves practices for automating and monitoring the ML lifecycle. It includes:

- Version control (DVC, Git)
- Automated pipelines (Airflow, Kubeflow)
- Model registry (MLflow)
- Monitoring (Prometheus, Grafana)

It ensures reproducibility, scalability, and governance.

10. How do you manage drift in production ML models?

Drift management includes:

- Monitoring data distribution over time
- Re-training triggers based on thresholds
- Drift detection algorithms (e.g., Kolmogorov–Smirnov test)
- Regular model retraining and validation
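A minimal sketch of a KS-based drift check for one numeric feature: compute the two-sample Kolmogorov–Smirnov statistic (the largest gap between the two empirical CDFs) and compare it with a threshold that would trigger retraining. `DRIFT_THRESHOLD` and the sample data are hypothetical; in practice `scipy.stats.ks_2samp` also provides a p-value, and thresholds are tuned per feature and sample size.

```python
from bisect import bisect_right

def ks_statistic(sample_a, sample_b):
    """Maximum gap between the two empirical CDFs (two-sample KS statistic)."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    # The ECDFs only change at observed values, so checking those points suffices.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

DRIFT_THRESHOLD = 0.2  # hypothetical retraining trigger

training_values = [float(v) for v in range(100)]
production_values = [float(v) + 25 for v in range(100)]
statistic = ks_statistic(training_values, production_values)
needs_retraining = statistic > DRIFT_THRESHOLD
print(statistic, needs_retraining)
```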

11. What is your experience with ensemble learning in real-world projects?

Ensemble learning helps improve accuracy and robustness. Techniques include:

- Bagging (Random Forest)
- Boosting (XGBoost, LightGBM)
- Stacking multiple model types

Custom ensembles using weighted averaging or meta-models are often used in competitions and real-world systems.

12. How do you handle real-time inference requirements?

Real-time inference demands low latency. Strategies include:

- Using lightweight models
- Model quantization
- Caching predictions
- Serving via low-latency frameworks (e.g., TensorFlow Serving, TorchServe)
- Edge deployment using TensorFlow Lite or ONNX

13. What’s your process for selecting evaluation metrics for different use cases?

Metric selection depends on the business goal:

- Classification: Precision, Recall, F1, AUC-ROC
- Regression: RMSE, MAE, R²
- Ranking: Mean Reciprocal Rank (MRR), NDCG
- Recommendation: Hit rate, MAP

Trade-offs between these metrics are evaluated against domain-specific KPIs.
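A minimal sketch of the two ranking metrics named above. MRR averages 1/rank of the first relevant item per query; NDCG@k divides the discounted cumulative gain of the ranking by that of the ideal ordering. This version uses linear gains (relevance / log2(position + 1)); some libraries use the exponential gain 2^relevance - 1 instead. The queries, items, and relevance grades are made up.

```python
import math

def mean_reciprocal_rank(ranked_lists, relevant_sets):
    """Average of 1/rank of the first relevant item (0 if none is found)."""
    total = 0.0
    for ranking, relevant in zip(ranked_lists, relevant_sets):
        for rank, item in enumerate(ranking, start=1):
            if item in relevant:
                total += 1.0 / rank
                break
    return total / len(ranked_lists)

def ndcg_at_k(ranking, relevance, k):
    """NDCG@k with linear gains; relevance maps item -> grade (missing = 0)."""
    def dcg(gains):
        # Position i (0-based) is discounted by log2(i + 2).
        return sum(g / math.log2(i + 2) for i, g in enumerate(gains))
    gains = [relevance.get(item, 0) for item in ranking[:k]]
    ideal_dcg = dcg(sorted(relevance.values(), reverse=True)[:k])
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0

print(mean_reciprocal_rank([["a", "b", "c"], ["x", "y", "z"]], [{"b"}, {"x"}]))  # 0.75
relevance = {"a": 3, "b": 2, "c": 1}
print(ndcg_at_k(["a", "b", "c"], relevance, 3))  # 1.0 (ideal order)
print(ndcg_at_k(["c", "b", "a"], relevance, 3))  # below 1.0
```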

14. How do you balance exploration and exploitation in reinforcement learning?

Balancing is handled via:

- Epsilon-greedy strategies
- Upper Confidence Bound (UCB)
- Thompson Sampling

These strategies keep the agent from committing too early to a suboptimal action, while also preventing it from exploring indefinitely.
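A minimal epsilon-greedy sketch on a hypothetical three-armed bandit with Bernoulli rewards: with probability epsilon the agent explores a random arm, otherwise it exploits the arm with the best running mean. The true reward probabilities, epsilon, and step count are illustrative assumptions.

```python
import random

def epsilon_greedy_bandit(true_probs, steps=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    n_arms = len(true_probs)
    counts = [0] * n_arms
    values = [0.0] * n_arms  # running mean reward per arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                     # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit
        reward = 1.0 if rng.random() < true_probs[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean
    return counts, values

counts, values = epsilon_greedy_bandit([0.2, 0.5, 0.7])
print(counts, [round(v, 2) for v in values])
```

Most pulls should end up on the best arm (index 2), while the fixed exploration rate keeps refining the estimates for the others. Decaying epsilon over time is a common refinement.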

15. Describe a challenging ML project and how you solved it.

(Answer varies by candidate but should include):

- Problem context
- Data acquisition and challenges
- Modeling techniques
- Deployment strategy
- Metrics achieved
- Lessons learned and improvements

Cracking a machine learning engineer interview in 2025 requires more than just theoretical knowledge—it demands practical experience, deep understanding of algorithms, and familiarity with deployment workflows, tools, and trends. Whether you're just starting your career or have years of experience, continuous learning is essential. This blog has aimed to provide a structured approach with real-world questions and detailed answers across all skill levels.

As machine learning continues to evolve rapidly with advancements in generative AI, edge computing, and MLOps, staying updated is vital. Build projects, contribute to open-source, collaborate with other professionals, and never stop exploring. Let this guide serve as both a foundation and a roadmap for your ML journey—from interview rooms to solving real-world business problems.

Stay curious. Keep learning. And go land that dream ML job.