Let’s Explore the Different Types of Clustering

Learn how clustering groups similar data, finds patterns, and turns unorganized information into something easier to understand, manage, and use in real life.

Are you trying to find a simple method to arrange a lot of disorganized data? Clustering, which is similar to organizing your family, food, and vacation images into different albums, is an effective method that puts related objects together. Even when working with new data, it facilitates the understanding of information and helps identify trends.

There are a lot of various ways to categorize data, which may be confusing, particularly since they all operate differently. Cluster analysis is extremely beneficial in this situation. Grouping related objects provides an easy way to comprehend patterns. Anyone can learn it without getting bogged down in technical jargon or complicated concepts if they follow easy steps and take the appropriate approach.

Clustering doesn't have to be difficult or complicated. It all comes down to putting related items together to make information easier to comprehend. To understand it, you do not need to be an expert in data. Learning that is easy to understand and boosts your confidence is what counts. Successful explanations have to feel more like a helpful guide than a riddle.

What is Clustering?

Clustering is the process of putting related objects in one group to make them simpler to deal with and comprehend. Clustering facilitates intelligent organization, much like when you sort your clothes into piles (shirts, pants, and socks). When you have a lot of data but unclear labeling, you often utilize it.

This technique makes it easier for you to spot patterns or trends that might not be immediately apparent. Clustering identifies what belongs together, whether you're working with text, statistics, or pictures. It’s a basic principle that makes vast, complicated material much easier to grasp, especially for novices.

Why Clustering Matters

-

Makes Data Easy to Understand: Clustering facilitates the division of large data sets into more manageable, related groups. Because of this, it is simpler to read, investigate, and comprehend the material without becoming bogged down in too much specifics.

-

Helps Find Patterns: When data is properly grouped, common patterns and relationships become apparent. Even if it wasn't previously obvious, clustering helps identify similarities or patterns in behavior.

-

Saves Time: Data sifting by hand might take hours. Clustering saves you time and allows you to concentrate more on the meaning of the groups by swiftly grouping related items together.

-

Improves Decision-Making: Making better decisions is aided by seeing distinct groupings. Clustering provides you with accurate information that helps you make smarter judgments rather than relying just on educated assumptions, whether you're researching your consumers or planning a project.

-

Useful in Many Areas: Clustering is useful for many real-world tasks, such as organizing photos, researching shopping habits, or analyzing academic performance. Anytime something needs to be categorized and made easier to understand, this tool is useful in Machine Learning and beyond.

-

Spots Unusual Things: There are instances when something doesn't belong in any group. Clustering aids in identifying these strange bits, which may indicate errors, uncommon occurrences, or even fresh prospects deserving of further investigation.

Applications of Clustering

-

Customer Segmentation: Businesses can use clustering to group customers according to spending patterns, preferences, or habits. This enables them to better understand the needs of each kind of customer, develop more individualized offers, and enhance services.

-

Market Research: Clustering identifies groupings of people who share similar beliefs or habits in surveys or research. When developing new products or launching campaigns, this aids businesses or researchers in concentrating on particular audiences.

-

Image Grouping: Clustering is frequently used by photo programs to group images, such as grouping family faces or all beach shots together. This facilitates faster and more pleasurable photo library searching and organization.

-

Document Organization: Clustering facilitates the organization of hundreds of documents or emails into similar subjects. You can swiftly locate what you're looking for without having to read everything due to this time-saving method.

-

Anomaly Detection: Clustering can be used to identify the data points that do not fit the pattern. This is highly helpful for identifying equipment malfunctions, detecting bank fraud, or identifying mistakes before they become more serious issues.

-

Medical Diagnosis: Researchers and medical professionals use clustering to put patients with comparable test results or symptoms together. Using common health patterns, it aids in the creation of better treatment programs and the detection of early warning indications in Machine Learning in Healthcare.

-

Social Network Analysis: Groups of friends or others with similar interests can be found on social media platforms through clustering. In addition to helping platforms more precisely recommend new friends, sites, or material, it demonstrates how people connect.

-

Recommendation Systems: Streaming and shopping services use clustering to make recommendations. By putting customers with similar preferences together and suggesting products that they liked, they make browsing more enjoyable and helpful.

-

Traffic Pattern Analysis: Clustering is a technique used by city planners to examine how people or cars navigate a city. It assists in identifying areas of high traffic and improves decision-making when it comes to road construction or traffic light changes.

-

Genetic Research: To identify patterns in gene data, scientists employ clustering. It aids in the understanding of diseases, the discovery of novel gene traits, and the identification of genes that behave similarly in studies of illness or treatment.

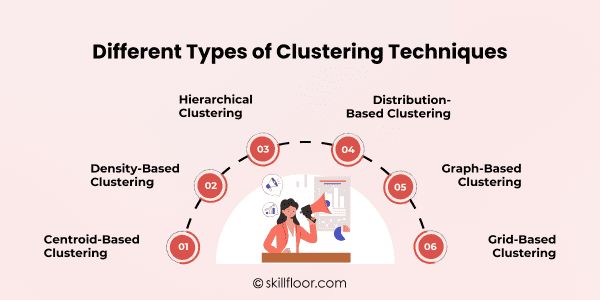

Different Types of Clustering Techniques

1. Centroid-Based Clustering

This approach locates the center of each cluster to group objects. It helps sort related things according to proximity and functions best when the data forms circular groupings.

-

K-Means Clustering: Each item is assigned to the closest group center using this approach, which selects a number of them. When you already know how many groups you need, it's quick and practical.

-

K-Medoids Clustering: Like K-Means, except it selects actual items as centers rather than the average center. As a result, it is more resilient to strange data points or poorly fitting values.

2. Density-Based Clustering

Density-Based Clustering determines groups by comparing the proximity of data points to one another. Points are labeled as noise if they are widely apart. It works well at identifying organic clusters of various sizes and forms, even in messy data.

-

DBSCAN (Density-Based Spatial Clustering of Applications with Noise): DBSCAN looks for regions with densely packed points to group data. Finding oddly shaped clusters and identifying items that don't fit—which are labeled as noise or outliers—are two of its strong points.

-

OPTICS (Ordering Points to Identify the Clustering Structure): OPTICS is comparable to DBSCAN, but it performs better when clusters have different sizes or shapes. Although it doesn't explicitly create groups, it does illustrate their structure, which makes it easier to see how closely related the points are.

3. Hierarchical Clustering

Hierarchical Clustering gradually creates a tree of groupings. It can begin with tiny groups and link them from the bottom up, or it might begin with all of them together and divide them from the top down. When you are unsure of the initial number of clusters, it is useful.

-

Agglomerative Clustering: The technique of agglomerative clustering is bottom-up. Until everything is connected, it starts with each item in its group and continues to join the nearest ones. In hierarchical clustering, this form is most frequently utilized.

-

Divisive Clustering: The reverse is true for Divisive Clustering. It begins by putting all of the objects in one large group and then continues to divide them into smaller groups according to their distinctions. It's less popular yet helpful for providing concise explanations.

-

Linkage Methods: Various linkage techniques (such as single, complete, and average) are used in hierarchical clustering to decide how to combine clusters according to the separations between data points.

4. Distribution-Based Clustering

The Distribution-Based Data is grouped using clustering according to the likelihood that each point fits into a particular pattern or shape, most frequently a bell-shaped curve. When clusters may overlap and you want more adaptable groupings, it's useful.

-

Gaussian Mixture Models (GMM): A combination of soft curves is used by Gaussian Mixture Models to identify data groups. Every point has a varied likelihood of belonging to more than one category. It functions best when there isn't a clear separation of the data.

5. Graph-Based Clustering

Graph-Based Clustering creates a network out of data, with each item representing a point connected to other points according to their degree of similarity. It then seeks for closely related groupings; in many ways a person may discover small groups of friends in a large crowd. It functions well with data about relationships, such as those found in web links or social networks.

-

Spectral Clustering: Spectral clustering creates a graph in which related points are linked. It then cleverly cuts the graph to find groups. For data with intricate relationships, such as social networks, it performs admirably.

6. Grid-Based Clustering

Grid-Based Clustering creates a checkerboard-like division of the data space into tiny square segments. The data is then grouped within those sections. This approach is quick and effective when dealing with big data sets. When your data fits nicely into a grid structure and you need results quickly, it's ideal.

Selecting the Right Clustering Technique for Your Data

-

Know Your Data Shape: Use K-Means or similar techniques if your data clusters into distinct, spherical groups. However, density-based techniques that can manage more organic, irregular patterns should be used if the groups have strange forms.

-

Check for Noise or Outliers: Points in some data don't belong anywhere. If so, use DBSCAN or HDBSCAN, which are able to identify and distinguish these strange points from appropriate groupings.

-

Look at the Size of Your Data: Because grid-based techniques like STING are quick, they are effective for large datasets. Other methods may be too heavy or slow when handling large amounts of data.

-

Start With Clean Data: Clean input is necessary for good results. Because of this, data preprocessing is important; fixing errors, scaling values, or filling in missing information can improve the accuracy and comprehensibility of clustering.

-

Check If Groups May Overlap: Use GMM when things may belong to more than one category, such as people who have a variety of hobbies. Instead of making every item fit into a single cluster, it provides soft groupings.

-

Think About the Type of Data: Graph-based clustering techniques, like Spectral Clustering, are more appropriate than location- or shape-based techniques if your data is grounded in relationships, such as friendships or links.

Common Mistakes to Avoid in Clustering

-

Clean Your Data First: Poor results may result from launching into clustering without first eliminating errors or missing values. To obtain more precise classifications, always clean and prepare your data before beginning.

-

Pick Right Number of Groups: Important patterns may be obscured if too few or too many groups are chosen. To determine which number makes the most sense for your data, experiment with several values and compare the outcomes.

-

Scale Features Before Clustering: Clustering may get confusing if characteristics, such as age and wealth, have various ranges. When creating groups, scaling your data aids in treating every feature equitably.

-

Try Different Clustering Methods: No single approach works for every task. Before choosing the clustering method that best suits your needs, try a few alternative approaches because different data require different approaches.

-

Check If Groups Make Sense: Clustering algorithms alone are insufficient. Always use summaries or visuals to ensure that the groups make sense and that your findings are applicable.

-

Watch Out for Outliers: By creating misleading clusters or removing group centers, outliers can have an impact on clustering findings. These odd spots should be identified and dealt with either before or throughout the procedure.

Putting similar things together helps you make sense of disorganized information. Clustering is like bringing order to chaos. Clustering is a straightforward yet effective method for identifying patterns in anything from customer information to images to traffic patterns. Selecting the approach that best suits your data and objective is crucial given the abundance of options. Spending time cleaning your data, trying out different strategies, and avoiding common errors may have a significant impact. The best thing about it? To begin, you don't have to know a lot about technology. Anyone can utilize clustering to transform jumbled data into understandable, practical insights with a careful approach.