Clustering in Machine Learning: A Simple Pattern Guide

Learn clustering in machine learning with simple patterns, real-world examples, and intuition to uncover hidden insights in your data and make smarter decisions.

Have you ever felt overwhelmed when looking at a large dataset? You know there is valuable information hidden within it: opportunities, trends, and patterns. However, all you see is noise. Many ideas get lost or become unclear in the gap between the data and actionable insights.

While the term "clustering" may appear complex in the context of machine learning, it is fundamentally a human concept. Every day, we categorize tasks, friends, and photos to simplify our lives. In essence, machines allow us to perform this same task more efficiently, handling larger amounts of data and reducing errors.

If you work in data, product, marketing, or team leadership, clustering is more than a tool. It's a fresh perspective on your work. It helps you find order in a muddle and make wiser decisions. Let's keep things simple and practical.

So… What Is Clustering?

Clustering groups similar things together without being told what the groups should be. You provide the data, and the algorithm looks for patterns on its own. The basic goal is to put similar objects close together and dissimilar ones far apart.

In machine learning, clustering helps computers find hidden groups in large datasets. It learns only from the structure of the data and works without labels. This makes it helpful when patterns are waiting to be discovered but the answers are not yet known.

Why Clustering Matters More Than You Think

By revealing hidden patterns in disorganized data, clustering enables you to make smarter decisions, gain a deeper understanding of consumers, and identify opportunities that others overlook or discover too late.

1. Better Customer Understanding

It helps companies group customers based on actual behavior rather than guesswork, so marketing, sales, and product teams can reach the right people in the right way.

2. Find Hidden User Types

By identifying hidden user categories that reports and charts frequently overlook, it assists teams in creating better features and resolving actual issues rather than hypothetical ones.

3. Save Time and Effort

It saves time by breaking big, complicated datasets into manageable segments that are easier to analyze, understand, and act on.

4. Spot Problems Early

It helps detect odd or dangerous events early, such as fraud or mistakes, before they develop into significant issues that cost money or trust.

5. Improve Personalized Experiences

By knowing what individuals truly like, rather than just what they clicked on once or twice, it enables personalized offers and content.

6. Make Better Decisions

It gives leaders a clearer picture of the actual situation, enabling them to make plans based on facts rather than just intuition or habit.

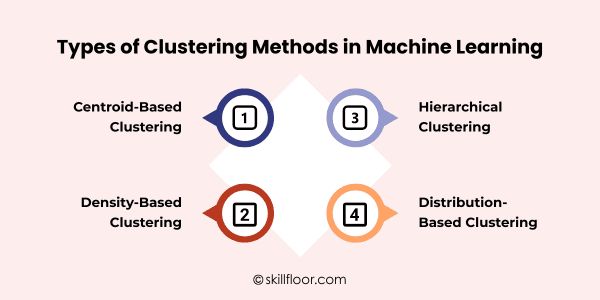

Different Types of Clustering Methods in Machine Learning

Clustering methods fall into a few broad families. Each one groups similar data points into meaningful clusters, but they differ in how they define a group, and that affects the hidden structures and patterns they can reveal in complicated datasets.

1. Centroid-Based Clustering

Centroid-based clustering finds a center point for each group of data. Each item is assigned to the group with the closest center. This approach is most effective when groups are simple, well-defined, and about equal in size.

Algorithms

- K-Means: Assigns each point to the nearest center, then repeatedly updates the centers until the groupings stop changing.
- K-Medoids: Similar to K-Means, but more stable on noisy data because it uses actual data points as centers.

Pros

- Easy to understand and simple to use
- Works fast even with very large data
- Good for clean and well-shaped groups
- Simple to explain to non-technical teams

Cons

- You must choose the number of groups first
- Sensitive to outliers and noisy data
- Struggles with complex or uneven shapes
- Results change based on starting points
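The K-Means loop described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function names, the toy points, and the choice to pass the starting centers in by hand (`init_centers`) are all assumptions made for the example. Passing the centers explicitly also makes it easy to see the last drawback in action, because different starting centers can lead to different groups.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two coordinate tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, init_centers, max_iter=100):
    """Plain K-Means sketch: assign points, update centers, repeat."""
    centers = [tuple(c) for c in init_centers]
    labels = None
    for _ in range(max_iter):
        # Assignment step: put each point in the group with the closest center.
        new_labels = [
            min(range(len(centers)), key=lambda c: squared_distance(p, centers[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # the groupings stopped changing
        labels = new_labels
        # Update step: move each center to the mean of its members.
        for c in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old center if a group ends up empty
                centers[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centers


# Hypothetical toy data: two small, well-separated groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
labels, centers = kmeans(points, init_centers=[points[0], points[3]])
print(labels)   # [0, 0, 0, 1, 1, 1]
print(centers)
```

In practice the starting centers are usually picked at random or by a smarter seeding rule, and the algorithm is run several times to reduce the effect of a bad start.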

2. Density-Based Clustering

Density-based clustering identifies groups by looking for regions where data points are densely packed. Points in sparse areas are treated as noise or outliers. This strategy excels at finding groups with odd or irregular shapes and at handling noisy data.

Algorithms

- DBSCAN: Builds clusters from points that have enough close neighbors, then automatically labels points in sparse regions as noise.
- HDBSCAN: An enhanced version of DBSCAN that detects more stable clusters with less manual setup and handles varying densities better.

Pros

- Finds clusters with any shape easily
- Automatically detects noise and outliers
- No need to set the number of clusters
- Works well with messy real-world data

Cons

- Sensitive to distance and density settings
- Struggles when densities vary too much
- Can be slower on very large data
- Hard to tune settings for beginners
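To make the "dense regions versus noise" idea concrete, here is a small DBSCAN-style sketch in plain Python. It is a simplified illustration under assumptions: the parameter names `eps` (neighborhood radius) and `min_pts` (how many neighbors count as "dense"), the toy points, and the brute-force neighbor search are choices made for the example, not a reference implementation.

```python
import math

NOISE = -1


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id, or NOISE if it sits in a sparse area."""
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbors(i):
        # Brute-force search: every point within eps of point i (including i).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may be relabeled later as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point reached from a dense point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nearby = neighbors(j)
            if len(nearby) >= min_pts:
                queue.extend(nearby)  # j is dense too, so keep expanding
    return labels


# Hypothetical toy data: two tight squares and one isolated point.
points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (5, 5)]
labels = dbscan(points, eps=1.5, min_pts=3)
print(labels)  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

Notice that the number of clusters was never specified. It falls out of the two density settings, which is also why those settings are the hard part to tune.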

3. Hierarchical Clustering

Hierarchical clustering builds a tree of groups within groups. It works either by repeatedly joining small groups or by repeatedly splitting larger ones. This approach is helpful when you want to look at data at several levels and understand the relationships between groups.

Algorithms

- Agglomerative: Starts with each point as its own group, then slowly joins the closest groups together until everything becomes one big group.
- Divisive: Starts with all points in one group and keeps splitting them into smaller groups step by step.

Pros

- No need to choose the number of groups first
- Easy to understand using tree diagrams
- Shows data structure at many levels
- Good for exploring unknown data

Cons

- Slow for very large data sets
- Hard to fix mistakes once made
- Sensitive to noise and outliers
- Uses a lot of memory for big data
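The agglomerative idea can be sketched as follows. This is a minimal illustration that assumes single linkage (the distance between two groups is the distance between their closest members); other linkage rules exist and give different trees. The function name, the `n_clusters` stopping rule, and the toy points are assumptions made for the example.

```python
import math


def agglomerative(points, n_clusters=1):
    """Merge the two closest groups until only n_clusters groups remain."""
    clusters = [[i] for i in range(len(points))]  # every point starts alone
    merges = []  # record of each merge: the "tree" read from bottom to top

    def linkage(a, b):
        # Single linkage: distance between the closest pair across two groups.
        return min(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > n_clusters:
        # Find the closest pair of groups (ties go to the earliest pair).
        dist, a, b = min(
            (linkage(clusters[a], clusters[b]), a, b)
            for a in range(len(clusters))
            for b in range(a + 1, len(clusters))
        )
        merges.append((clusters[a], clusters[b], dist))
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return clusters, merges


# Hypothetical toy data: two pairs of nearby points and one distant point.
points = [(0, 0), (0, 1), (5, 5), (5, 6), (20, 20)]
clusters, merges = agglomerative(points, n_clusters=2)
print(clusters)  # [[0, 1, 2, 3], [4]]
for left, right, dist in merges:
    print(left, "+", right, "at distance", round(dist, 2))
```

The list of merges is what a tree diagram (dendrogram) draws. Cutting the tree at a different height is the same as stopping the loop at a different `n_clusters`. The nested search over all pairs also shows why this family gets slow on large data.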

4. Distribution-Based Clustering

Distribution-based clustering assumes the data was generated by a mixture of probability distributions and groups points by the distribution most likely to have produced them. A point can belong to more than one group, each with some probability. This approach works well when groups overlap and clear boundaries are hard to see.

Algorithms

- Gaussian Mixture Model (GMM): Assumes the data comes from several bell-shaped (Gaussian) groups and gives each point a probability of belonging to each one.
- Bayesian Mixture Models: Similar to GMM, but in many situations they can infer a suitable number of groups from the data.

Pros

- Allows soft grouping with probability values
- Works well with overlapping groups
- Handles complex data patterns better
- Gives more detailed and flexible results

Cons

- More complex and harder to understand
- Needs more computing time and power
- Depends on correct model assumptions
- Can give wrong results with poor data
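Here is a rough sketch of what "soft grouping" looks like for a Gaussian mixture. To stay short, it makes several simplifying assumptions that are not part of the general method: the data is one-dimensional, there are exactly two groups, and the starting means are simply the smallest and largest values. The fitting loop is the standard expectation-maximization (EM) recipe, and all names and numbers are illustrative.

```python
import math


def gaussian_pdf(x, mean, var):
    """Height of the bell curve with the given mean and variance at x."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def responsibilities(x, weights, means, variances):
    """Probability that x belongs to each group; the values sum to 1."""
    dens = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
    total = sum(dens)
    return [d / total for d in dens]


def fit_gmm_1d(data, n_iter=50):
    """EM sketch for a two-group, one-dimensional Gaussian mixture."""
    n = len(data)
    overall_mean = sum(data) / n
    overall_var = sum((x - overall_mean) ** 2 for x in data) / n
    weights = [0.5, 0.5]
    means = [min(data), max(data)]  # simple deterministic start (an assumption)
    variances = [overall_var, overall_var]
    for _ in range(n_iter):
        # E-step: soft-assign every point to both groups.
        resp = [responsibilities(x, weights, means, variances) for x in data]
        # M-step: re-estimate each group from its softly assigned points.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / n
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var, 1e-6)  # floor so a group cannot collapse
    return weights, means, variances


# Hypothetical toy data: one group around 1 and another around 5.
data = [0.0, 0.5, 1.0, 1.5, 2.0, 4.0, 4.5, 5.0, 5.5, 6.0]
weights, means, variances = fit_gmm_1d(data)
for x in (0.5, 2.5, 3.0):
    print(x, [round(p, 3) for p in responsibilities(x, weights, means, variances)])
```

A point deep inside one group gets a probability close to 1 for that group, while a point between the groups gets a more even split. That split is the extra information a hard label would throw away.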

How to Choose the Right Clustering Method in Machine Learning

The best clustering technique depends on the size and shape of your data and on your objective. There is no single ideal option. The best method is the one that fits your data and your situation.

1. Messy or Noisy Data

If your data is messy and contains noise or unusual values, density-based algorithms can identify the true groupings and automatically set aside the points that do not belong to any of them.

2. Clean and Round

If your groups look round and tidy, centroid-based algorithms perform well and give quick results, even on very large datasets.

3. Unknown Group Count

If you do not know how many groups there are and want to examine the data at multiple levels, hierarchical approaches can display the entire structure clearly.

4. Overlapping Groups

If your groups overlap and you want probabilities rather than fixed labels, distribution-based approaches are a better option.

5. Speed Matters Most

If your dataset is very large and speed matters more than capturing the exact shape of each group, simple and quick methods are usually the better choice.

6. Easy to Explain

If your results need to be simple enough for business teams to understand, choose simple methods rather than intricate models.

How Do You Know If Clustering Is “Good”?

The goal of clustering is not to find one perfect answer. The goal is to find useful groups that improve your understanding of the data and help you make wiser decisions in real work.

1. Visual Check

Plot the data in simple graphs or charts to check whether the groups make sense, look distinct, and show an understandable pattern.

2. Group Separation

To verify that the groups are truly distinct and meaningful, measure how close points are to others in their own group and how far they are from points in other groups.

3. Score-Based Tests

Use simple scores such as the silhouette score, which gauges how well each point fits its own group compared with the nearest other group.
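As a rough illustration of such a score, the sketch below computes the mean silhouette by hand. For each point, `a` is the average distance to the other points in its own group and `b` is the average distance to the points in the nearest other group; the point's score is `(b - a) / max(a, b)`, so values near 1 mean a good fit and values near 0 or below mean a poor one. The function name and the toy data are assumptions made for the example, and the code assumes there are at least two groups.

```python
import math


def mean_silhouette(points, labels):
    """Average silhouette over all points (assumes at least two groups)."""
    scores = []
    for i, p in enumerate(points):
        # Distances from p to every other point, collected per group label.
        by_group = {}
        for j, q in enumerate(points):
            if j != i:
                by_group.setdefault(labels[j], []).append(math.dist(p, q))
        own = by_group.get(labels[i])
        if not own:
            scores.append(0.0)  # a point alone in its group scores 0
            continue
        a = sum(own) / len(own)
        b = min(sum(d) / len(d) for lab, d in by_group.items() if lab != labels[i])
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


# Hypothetical toy data: two obvious groups, labeled sensibly and then badly.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
good = mean_silhouette(points, [0, 0, 0, 1, 1, 1])
bad = mean_silhouette(points, [0, 1, 0, 1, 0, 1])
print(round(good, 3), round(bad, 3))
```

The sensible labeling scores close to 1, while the scrambled one scores close to 0. Comparing scores across different numbers of groups is one common way to choose that number.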

4. Business Use Value

The most effective clusters help teams make decisions, improve products, and gain a deeper understanding of customer behavior.

5. Stable Results

Run the process again, for example with different starting points or a different sample of the data, to see whether you get similar groups. If you do, the outcome is less likely to be arbitrary or due to chance.

6. Real World Testing

Test clusters by applying them to actual tasks and checking whether they really improve planning and outcomes.

Real-World Applications of Clustering

Clustering helps turn raw data into useful knowledge. It identifies hidden patterns, supports better decisions across industries, and makes large datasets easier to understand, analyze, and put to practical use.

1. Customer Segmentation

Clustering helps organizations tailor their marketing and improve overall customer engagement by grouping customers based on their behavior, preferences, and past purchases.

2. Image Segmentation

By dividing images into relevant regions, clustering makes it possible for computers to more precisely identify objects, borders, and significant visual patterns.

3. Document Grouping

Clustering groups large document collections into categories, which makes search engines, recommendations, and content management faster and more effective.

4. Fraud Detection

By identifying anomalous transaction patterns, clustering assists financial organizations in spotting suspicious activity, stopping fraud, and lowering losses.

5. Social Network Analysis

By grouping users based on their interactions and interests, clustering enables platforms to find communities, suggest connections, and examine trends in the flow of information.

6. Medical Data Grouping

Clustering helps in disease analysis, diagnosis support, treatment planning, and advancements in healthcare research by grouping patient records based on shared characteristics.

Common Mistakes Beginners Make

Beginners frequently assume that every dataset inherently has clusters, misuse techniques, choose incorrect cluster numbers, overlook noise, and forget to scale data.

1. Not Scaling Data

When features are not scaled, variables with large numeric ranges dominate the distance calculation, creating false clusters and inaccurate results.
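A small sketch makes the problem visible. The customer records below are hypothetical, and standardization (subtract the mean, divide by the standard deviation) is just one common scaling choice assumed for the example.

```python
import math
import statistics


def standardize(rows):
    """Rescale every column to mean 0 and standard deviation 1."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # Fall back to 1.0 so a constant column does not cause division by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return [
        tuple((value - m) / s for value, m, s in zip(row, means, stds))
        for row in rows
    ]


# Hypothetical customers: (annual income in dollars, store visits per month).
customers = [(30000, 2), (32000, 20), (90000, 3), (88000, 19)]

# Raw distances: the income column swamps the visits column.
raw_visits_gap = math.dist(customers[0], customers[1])  # similar income, very different visits
raw_income_gap = math.dist(customers[0], customers[2])  # similar visits, very different income

scaled = standardize(customers)
scaled_visits_gap = math.dist(scaled[0], scaled[1])
scaled_income_gap = math.dist(scaled[0], scaled[2])

print(round(raw_visits_gap), round(raw_income_gap))
print(round(scaled_visits_gap, 2), round(scaled_income_gap, 2))
```

Before scaling, a customer who visits ten times more often looks almost identical to one who rarely visits, simply because income is measured in much larger numbers. After scaling, both differences carry comparable weight in the distance.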

2. Choosing the Wrong K

Choosing the wrong number of clusters forces unnatural groupings, which makes the findings unclear, unstable, and hard to interpret correctly.

3. Using K-Means Everywhere

When K-Means is applied without checking its assumptions, it ignores noise and the shape and complexity of the data, which often leads to poor clustering results in practice.

4. Ignoring Noise and Outliers

When noisy data points are ignored, cluster boundaries are distorted, accuracy is decreased, and significant underlying data patterns are concealed.

5. Assuming Clusters Always Exist

Not all datasets have natural groups, and forced clustering can lead to false findings and deceptive patterns.

6. Not Validating Clustering Results

It is challenging to assess cluster quality, utility, and dependability for practical decision making when validation procedures are neglected.

Finally, dealing with data doesn't have to be frightening or confusing. Clustering teaches you to recognize groups where others see only noise. It helps you notice things you might otherwise overlook and ask more insightful questions. Over time, clustering becomes less about the tools and more about your way of thinking. You begin to notice trends in users, tasks, and outcomes, and that changes how you plan and make decisions. You don't have to be perfect to start. You only need to be curious and willing to try. Step by step, clustering can help you turn disorganized data into clear ideas and practical applications.